|

NeuZephyr

Simple DL Framework

|

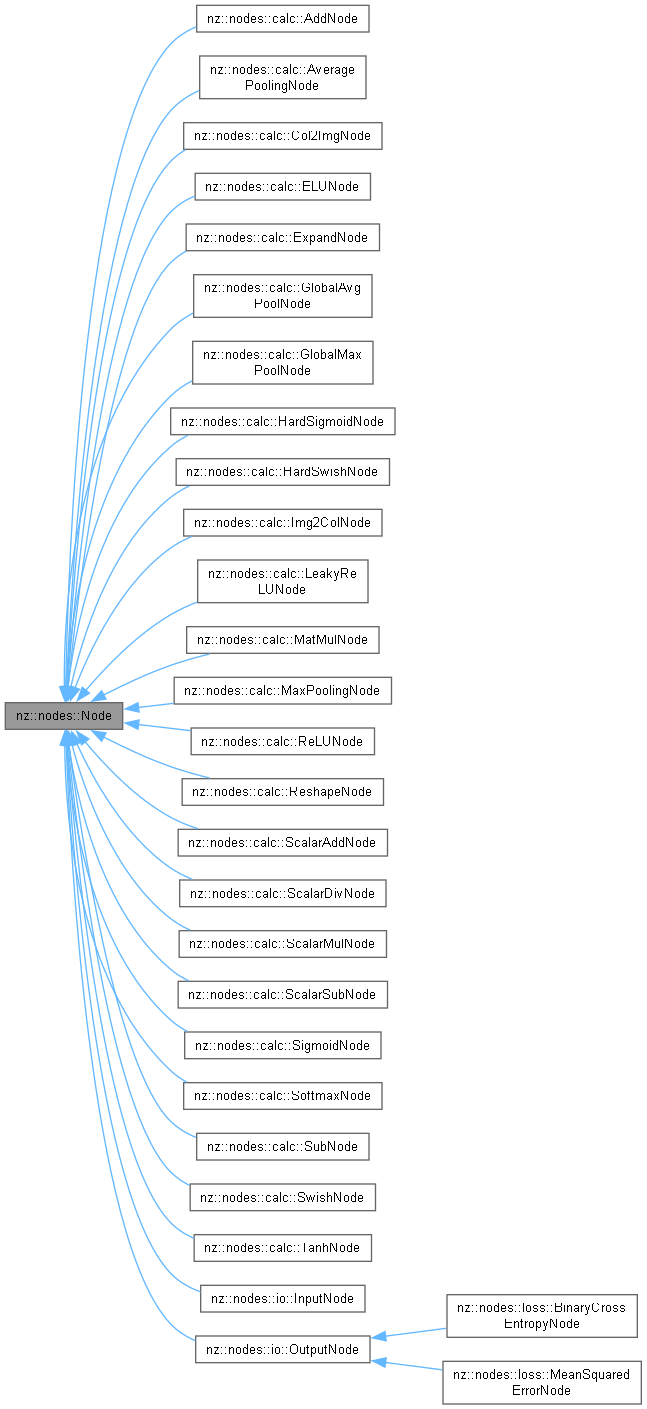

Base class for nodes in a neural network or computational graph. More...

Public Member Functions | |

| virtual void | forward ()=0 |

| Abstract method for the forward pass computation. | |

| virtual void | backward ()=0 |

| Abstract method for the backward pass (gradient computation). | |

| virtual void | print (std::ostream &os) const |

| Prints the type, data, and gradient of the node. | |

| void | dataInject (Tensor::value_type *data, bool grad=false) const |

| Injects data into a relevant tensor object, optionally setting its gradient requirement. | |

| template<typename Iterator > | |

| void | dataInject (Iterator begin, Iterator end, const bool grad=false) const |

| Injects data from an iterator range into the output tensor of the Node, optionally setting its gradient requirement. | |

| void | dataInject (const std::initializer_list< Tensor::value_type > &data, bool grad=false) const |

| Injects data from a std::initializer_list into the output tensor of the Node, optionally setting its gradient requirement. | |

Base class for nodes in a neural network or computational graph.

The Node class serves as an abstract base class for all types of nodes in a computational graph, commonly used in neural networks. Each node represents an operation or a layer in the graph, with input and output connections that allow data to flow through the network. The forward() and backward() methods define the computations to be performed during the forward and backward passes of the network, respectively.

This class is designed to be subclassed and extended for specific layers or operations. Derived classes are required to implement the forward() and backward() methods to define the specific computations for each node.

Key features:

- A Tensor object that stores the result of this node's computation.
- Abstract methods forward() and backward() that must be implemented by derived classes to perform the forward and backward propagation steps of the neural network.

This class is part of the nz::nodes namespace, and is intended to be used as a base class for defining custom layers or operations in a neural network.

Derived classes must implement the forward() and backward() functions to define the specific computations for the node.
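The subclassing pattern described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the library's code: `MiniNode`, `MiniInput`, and `MiniAdd` are hypothetical stand-ins that store plain `std::vector<float>` values on the host, whereas the real `nz::nodes::Node` works with `Tensor` objects whose members and constructors are not shown in this section.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical stand-in for nz::nodes::Node (host-only, no Tensor class).
struct MiniNode {
    std::vector<MiniNode*> inputs;  // upstream nodes feeding this node
    std::vector<float> output;      // result of forward()
    std::vector<float> grad;        // gradient of the loss w.r.t. output
    virtual ~MiniNode() = default;
    virtual void forward() = 0;     // must be provided by derived classes
    virtual void backward() = 0;    // must be provided by derived classes
};

// Leaf node: holds data and performs no computation.
struct MiniInput : MiniNode {
    explicit MiniInput(std::vector<float> values) {
        output = std::move(values);
        grad.assign(output.size(), 0.0f);
    }
    void forward() override {}
    void backward() override {}
};

// Element-wise addition of two inputs of equal size.
struct MiniAdd : MiniNode {
    MiniAdd(MiniNode* a, MiniNode* b) { inputs = {a, b}; }

    void forward() override {
        const std::vector<float>& a = inputs[0]->output;
        const std::vector<float>& b = inputs[1]->output;
        assert(a.size() == b.size());
        output.resize(a.size());
        for (std::size_t i = 0; i < a.size(); ++i) output[i] = a[i] + b[i];
        grad.assign(output.size(), 0.0f);
    }

    void backward() override {
        // d(a+b)/da = d(a+b)/db = 1, so the upstream gradient passes through.
        for (MiniNode* in : inputs)
            for (std::size_t i = 0; i < grad.size(); ++i) in->grad[i] += grad[i];
    }
};
```

In this sketch the caller seeds `grad` on the last node before calling `backward()`; how the real framework seeds and schedules gradients is not covered here.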

| virtual void nz::nodes::Node::backward | ( | ) | pure virtual |

Abstract method for the backward pass (gradient computation).

The backward() method is a pure virtual function in the Node class, which must be implemented by derived classes. It is responsible for computing the gradients during the backward pass of the neural network or computational graph, which is used for backpropagation in training.

During the backward pass, the error gradients are propagated backward through the network, from the output nodes to the input nodes. Each node computes the gradient of its output with respect to its input, using the chain rule of calculus, to update the weights or parameters of the network.

Derived classes that represent specific layers or operations must implement this method to define how gradients are calculated for that particular layer or operation.

The backward() method must be implemented by any class derived from Node. It should compute the gradient of the output with respect to the node's input and store it in the node's grad tensor.

Implemented in nz::nodes::calc::AddNode, nz::nodes::calc::AveragePoolingNode, nz::nodes::calc::Col2ImgNode, nz::nodes::calc::ELUNode, nz::nodes::calc::ExpandNode, nz::nodes::calc::GlobalAvgPoolNode, nz::nodes::calc::GlobalMaxPoolNode, nz::nodes::calc::HardSigmoidNode, nz::nodes::calc::HardSwishNode, nz::nodes::calc::Img2ColNode, nz::nodes::calc::LeakyReLUNode, nz::nodes::calc::MatMulNode, nz::nodes::calc::MaxPoolingNode, nz::nodes::calc::ReLUNode, nz::nodes::calc::ReshapeNode, nz::nodes::calc::ScalarAddNode, nz::nodes::calc::ScalarDivNode, nz::nodes::calc::ScalarMulNode, nz::nodes::calc::ScalarSubNode, nz::nodes::calc::SigmoidNode, nz::nodes::calc::SoftmaxNode, nz::nodes::calc::SubNode, nz::nodes::calc::SwishNode, nz::nodes::calc::TanhNode, nz::nodes::io::InputNode, nz::nodes::io::OutputNode, nz::nodes::loss::BinaryCrossEntropyNode, and nz::nodes::loss::MeanSquaredErrorNode.
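To make the chain-rule step concrete, here is a small host-side sketch of a backward computation for a ReLU operation. The function name `reluBackward` and the use of `std::vector<float>` are assumptions made for illustration; this is not the code of `nz::nodes::calc::ReLUNode`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Chain rule for y = relu(x): dL/dx_i = dL/dy_i * (x_i > 0 ? 1 : 0).
// Host-side illustration only; not the library's implementation.
std::vector<float> reluBackward(const std::vector<float>& x,
                                const std::vector<float>& gradY) {
    assert(x.size() == gradY.size());
    std::vector<float> gradX(x.size());
    for (std::size_t i = 0; i < x.size(); ++i)
        gradX[i] = x[i] > 0.0f ? gradY[i] : 0.0f;
    return gradX;
}
```

The upstream gradient `gradY` is multiplied by the local derivative of the operation, which is the pattern every backward() implementation follows for its own operation.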

| void nz::nodes::Node::dataInject | ( | const std::initializer_list< Tensor::value_type > & | data, |

| bool | grad = false ) const |

Injects data from a std::initializer_list into the output tensor of the Node, optionally setting its gradient requirement.

| data | A std::initializer_list containing the data to be injected into the output tensor (host-to-device). |

| grad | A boolean indicating whether the output tensor should require gradient computation after data injection. |

This function is responsible for injecting data from a std::initializer_list into the output tensor of the Node. Memory management is handled by the underlying dataInject method of the Tensor class. The output tensor is assumed to have already allocated enough memory to accommodate the data in the std::initializer_list.

Regarding exception handling, this function does not explicitly catch any exceptions. Exceptions that might occur during data injection, such as memory allocation errors in the Tensor class, will propagate to the caller.

This function acts as a bridge between the Node and its output tensor, allowing data to be easily provided using a std::initializer_list.

| None | explicitly, but the dataInject method of the Tensor class may throw exceptions, such as std::bad_alloc if memory allocation fails during the injection process. |

Ensure that the std::initializer_list contains enough elements to fill the output tensor according to its shape. The cost of this function grows with the number of elements in the std::initializer_list, as it involves copying data from the list into the tensor.
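The size requirement above can be illustrated with a host-only sketch. `MiniTensor` and `injectList` are hypothetical names, and the explicit size check is an addition for the example: the documented function assumes the caller has already satisfied the requirement.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <initializer_list>
#include <stdexcept>
#include <vector>

// Hypothetical host-only stand-in for a tensor with pre-allocated storage.
struct MiniTensor {
    std::vector<float> data;
    bool requiresGrad = false;
    explicit MiniTensor(std::size_t n) : data(n, 0.0f) {}
};

// Copies exactly data.size() elements from the list into the tensor.
// The size check is an assumption of this sketch, not documented behaviour.
void injectList(MiniTensor& t, std::initializer_list<float> values,
                bool grad = false) {
    if (values.size() < t.data.size())
        throw std::invalid_argument("initializer_list has too few elements");
    std::copy_n(values.begin(), t.data.size(), t.data.begin());
    t.requiresGrad = grad;
}

// Usage: fill a three-element tensor and mark it as requiring gradients.
inline void exampleInjectList() {
    MiniTensor t(3);
    injectList(t, {1.0f, 2.0f, 3.0f}, true);
    assert(t.data[2] == 3.0f);
    assert(t.requiresGrad);
}
```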

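| template<typename Iterator > |

| void nz::nodes::Node::dataInject | ( | Iterator | begin, |

| Iterator | end, |

| const bool | grad = false ) const | inline |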

Injects data from an iterator range into the output tensor of the Node, optionally setting its gradient requirement.

| Iterator | The type of the iterators used to define the data range. It should support the standard iterator operations like dereferencing and incrementing. |

| begin | An iterator pointing to the beginning of the data range (host-to-device). The data in this range will be injected into the output tensor. |

| end | An iterator pointing to the end of the data range (host-to-device). |

| grad | A boolean indicating whether the output tensor should require gradient computation after data injection. Defaults to false. |

This template function is used to inject data from an iterator range into the output tensor of the Node. Memory management is handled by the underlying dataInject method of the Tensor class. It is assumed that the output tensor has already allocated sufficient memory to hold the data from the iterator range.

Regarding exception handling, this function does not explicitly catch any exceptions. Exceptions that might occur during data injection, such as iterator invalidation or memory allocation errors in the Tensor class, will propagate to the caller.

This function serves as a wrapper around the dataInject method of the output tensor, facilitating the use of iterators to provide data for injection.

| None | explicitly, but the dataInject method of the Tensor class may throw exceptions, such as std::bad_alloc if memory allocation fails during the injection process. |
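One plausible way to handle an arbitrary iterator range is to stage it into a contiguous host buffer first, since iterators such as those of `std::list` do not point at contiguous memory. The sketch below shows only that staging step; `stageRange` is a hypothetical helper, and whether the library stages data this way is an assumption.

```cpp
#include <cassert>
#include <list>
#include <vector>

// Copies [begin, end) into a contiguous host buffer, converting each element
// to float. A real implementation would then copy this buffer to the device.
template <typename Iterator>
std::vector<float> stageRange(Iterator begin, Iterator end) {
    std::vector<float> staged;
    for (Iterator it = begin; it != end; ++it)
        staged.push_back(static_cast<float>(*it));
    return staged;
}

// Usage: a non-contiguous container with a different element type.
inline void exampleStageRange() {
    const std::list<double> values{0.5, 1.5, 2.5};
    const std::vector<float> staged = stageRange(values.begin(), values.end());
    assert(staged.size() == 3);
    assert(staged[1] == 1.5f);
}
```

The `static_cast` reflects the requirement that the iterator's element type be compatible with the tensor's value type.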

Ensure that the iterator range [begin, end) is valid and that the data type pointed to by the iterators is compatible with the Tensor::value_type.

| void nz::nodes::Node::dataInject | ( | Tensor::value_type * | data, |

| bool | grad = false ) const |

Injects data into a relevant tensor object, optionally setting its gradient requirement.

| data | A pointer to the data to be injected into the tensor (host-to-device). This data will be used to populate the tensor. |

| grad | A boolean indicating whether the tensor should require gradient computation after data injection. Defaults to false. |

This function is designed to inject data into a tensor object. Memory management within this function is handled by the underlying tensor operations. It is assumed that the tensor object has already allocated the necessary memory to hold the data pointed to by data.

Regarding exception handling, this function does not explicitly catch any exceptions. Exceptions that might occur during data injection, such as memory access errors or CUDA errors (if applicable), will propagate to the caller.

This function likely interacts with other components related to the tensor, such as the computation graph or the gradient computation system, depending on the value of grad.

| None | explicitly, but underlying tensor operations may throw exceptions, such as std::bad_alloc if memory allocation fails during the injection process. |

Ensure that the data pointer is valid and points to enough data to fill the target tensor.
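Because a raw pointer carries no length, the number of elements read is determined entirely by the tensor's size. The following host-only sketch makes that explicit; `HostTensor` and `injectRaw` are hypothetical names, and the real function copies to device memory rather than to a `std::vector`.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical host-only stand-in for a tensor with pre-allocated storage.
struct HostTensor {
    std::vector<float> data;
    bool requiresGrad = false;
    explicit HostTensor(std::size_t n) : data(n, 0.0f) {}
};

// Reads exactly t.data.size() elements from src. The caller must guarantee
// that src points to at least that many valid elements.
void injectRaw(HostTensor& t, const float* src, bool grad = false) {
    assert(src != nullptr);
    std::copy_n(src, t.data.size(), t.data.begin());
    t.requiresGrad = grad;
}
```

A source buffer larger than the tensor is harmless in this sketch, since the extra elements are never read; a smaller one results in an out-of-bounds read.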

| virtual void nz::nodes::Node::forward | ( | ) | pure virtual |

Abstract method for the forward pass computation.

The forward() method is a pure virtual function in the Node class, which must be implemented by derived classes. It is responsible for performing the computation during the forward pass of the neural network or computational graph.

In the forward pass, data flows through the network from input nodes to output nodes, and each node performs its specific computation (e.g., activation, matrix multiplication, etc.) based on the data it receives as input.

Derived classes that represent specific layers or operations (such as activation functions, convolution layers, etc.) must implement this method to define the exact computation to be performed for that layer.

Implemented in nz::nodes::calc::AddNode, nz::nodes::calc::AveragePoolingNode, nz::nodes::calc::Col2ImgNode, nz::nodes::calc::ELUNode, nz::nodes::calc::ExpandNode, nz::nodes::calc::GlobalAvgPoolNode, nz::nodes::calc::GlobalMaxPoolNode, nz::nodes::calc::HardSigmoidNode, nz::nodes::calc::HardSwishNode, nz::nodes::calc::Img2ColNode, nz::nodes::calc::LeakyReLUNode, nz::nodes::calc::MatMulNode, nz::nodes::calc::MaxPoolingNode, nz::nodes::calc::ReLUNode, nz::nodes::calc::ReshapeNode, nz::nodes::calc::ScalarAddNode, nz::nodes::calc::ScalarDivNode, nz::nodes::calc::ScalarMulNode, nz::nodes::calc::ScalarSubNode, nz::nodes::calc::SigmoidNode, nz::nodes::calc::SoftmaxNode, nz::nodes::calc::SubNode, nz::nodes::calc::SwishNode, nz::nodes::calc::TanhNode, nz::nodes::io::InputNode, nz::nodes::io::OutputNode, nz::nodes::loss::BinaryCrossEntropyNode, and nz::nodes::loss::MeanSquaredErrorNode.
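The input-to-output data flow of a forward pass can be sketched as a chain of stages, each consuming the previous stage's output. The names `Values`, `Stage`, `scaleBy2`, `relu`, and `runForward` are hypothetical, and the sketch covers only a linear chain on the host, not the library's graph scheduling.

```cpp
#include <cassert>
#include <functional>
#include <vector>

using Values = std::vector<float>;
using Stage = std::function<Values(const Values&)>;

// Example stage: multiplies every element by 2.
Values scaleBy2(const Values& v) {
    Values out(v);
    for (float& x : out) x *= 2.0f;
    return out;
}

// Example stage: element-wise ReLU activation.
Values relu(const Values& v) {
    Values out(v);
    for (float& x : out) x = x > 0.0f ? x : 0.0f;
    return out;
}

// Runs the stages in order, feeding each stage's output to the next one.
Values runForward(Values x, const std::vector<Stage>& stages) {
    assert(!stages.empty());
    for (const Stage& stage : stages) x = stage(x);
    return x;
}
```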

| void nz::nodes::Node::print | ( | std::ostream & | os | ) const | virtual |

Prints the type, data, and gradient of the node.

The print() method outputs the information about the node, including its type, the tensor data stored in the node's output, and the corresponding gradient. This is useful for debugging and inspecting the state of nodes in a computational graph or during training, allowing for easy visualization of the node's content and gradients.

The method outputs the following details:

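- The type of the node.
- The data stored in the node's output tensor.
- The gradient of the node's output tensor.

This method is primarily used for debugging and monitoring the state of tensors and gradients, making it easier to inspect how the data and gradients flow through the network.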

The output tensor should contain both the data and the gradient information, and both are printed when this method is called.

| os | The output stream (e.g., std::cout) to which the node's information will be printed. |

Reimplemented in nz::nodes::io::OutputNode.
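Since print() is virtual, a derived class can extend the base output with its own fields. The sketch below shows the pattern with hypothetical types (`PrintableNode`, `LossLikeNode`) and an invented output format; it does not reproduce what `nz::nodes::io::OutputNode` actually prints.

```cpp
#include <cassert>
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical stand-in for a node with a virtual print() method.
struct PrintableNode {
    std::string type = "Node";
    std::vector<float> data;
    std::vector<float> grad;
    virtual ~PrintableNode() = default;

    virtual void print(std::ostream& os) const {
        os << "type: " << type << "\ndata:";
        for (float v : data) os << ' ' << v;
        os << "\ngrad:";
        for (float g : grad) os << ' ' << g;
        os << '\n';
    }
};

// Derived node that appends one extra field to the base output.
struct LossLikeNode : PrintableNode {
    float loss = 0.0f;
    void print(std::ostream& os) const override {
        PrintableNode::print(os);
        os << "loss: " << loss << '\n';
    }
};

// Streaming a node dispatches to the most derived print().
inline std::ostream& operator<<(std::ostream& os, const PrintableNode& n) {
    n.print(os);
    return os;
}

// Usage: the override is selected even through a base-class reference.
inline void examplePrint() {
    LossLikeNode n;
    n.type = "Loss";
    const PrintableNode& base = n;
    std::ostringstream ss;
    ss << base;
    assert(ss.str().find("loss:") != std::string::npos);
}
```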