
NeuZephyr

Simple DL Framework


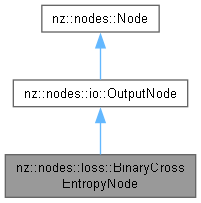

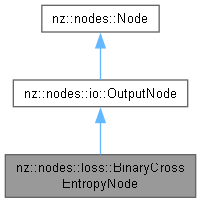

Represents the Binary Cross-Entropy (BCE) loss function node in a computational graph.

Public Member Functions | |

| BinaryCrossEntropyNode (Node *input1, Node *input2) | |

Constructor to initialize a BinaryCrossEntropyNode for computing the Binary Cross-Entropy loss. | |

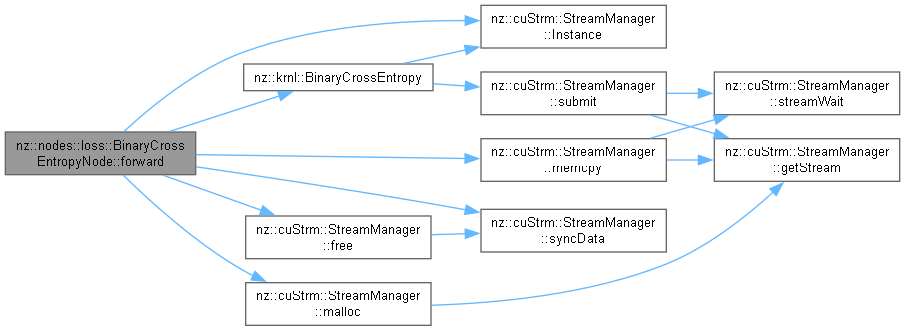

| void | forward () override |

| Computes the Binary Cross-Entropy (BCE) loss in the forward pass. | |

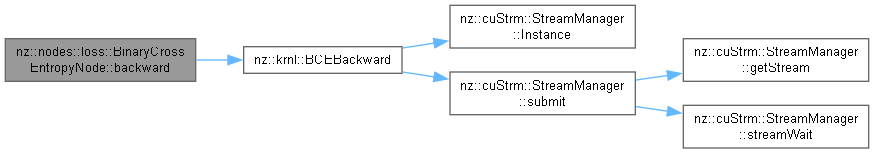

| void | backward () override |

| Computes the gradients of the Binary Cross-Entropy (BCE) loss with respect to the inputs during the backward pass. | |

Public Member Functions inherited from nz::nodes::io::OutputNode

| Return type | Member | Description |
|---|---|---|
| | `OutputNode (Node *input)` | Constructor to initialize an OutputNode with a given input node. |
| `void` | `forward () override` | Forward pass for the OutputNode. |
| `void` | `backward () override` | Backward pass for the OutputNode. |
| `Tensor::value_type` | `getLoss () const` | Retrieves the loss value stored in the OutputNode. |
| `void` | `print (std::ostream &os) const override` | Prints the type, data, gradient, and loss of the node. |

Public Member Functions inherited from nz::nodes::Node

| Return type | Member | Description |
|---|---|---|
| `void` | `dataInject (Tensor::value_type *data, bool grad=false) const` | Injects data into a relevant tensor object, optionally setting its gradient requirement. |
| `template<typename Iterator> void` | `dataInject (Iterator begin, Iterator end, const bool grad=false) const` | Injects data from an iterator range into the output tensor of the InputNode, optionally setting its gradient requirement. |
| `void` | `dataInject (const std::initializer_list< Tensor::value_type > &data, bool grad=false) const` | Injects data from a std::initializer_list into the output tensor of the Node, optionally setting its gradient requirement. |

Represents the Binary Cross-Entropy (BCE) loss function node in a computational graph.

The BinaryCrossEntropyNode class computes the Binary Cross-Entropy loss between two input tensors. BCE is typically used in binary classification tasks to measure the difference between predicted probabilities and the true binary labels. The loss is calculated as:

$$\text{BCE}(x, y) = -\left[ y \log(x) + (1 - y) \log(1 - x) \right]$$

where x represents the predicted probabilities (output of the model), and y represents the true binary labels (either 0 or 1). The loss is computed element-wise for each pair of corresponding values in the tensors.
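For intuition, evaluating the per-element term $-[y \log x + (1 - y) \log(1 - x)]$ on two single-element cases (natural logarithm assumed) shows that a confident wrong prediction costs far more than a confident correct one:

$$
\begin{aligned}
x = 0.9,\ y = 1 &:\quad -\left[1 \cdot \ln 0.9 + 0 \cdot \ln 0.1\right] = -\ln 0.9 \approx 0.1054 \\
x = 0.9,\ y = 0 &:\quad -\left[0 \cdot \ln 0.9 + 1 \cdot \ln 0.1\right] = -\ln 0.1 \approx 2.3026
\end{aligned}
$$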

Key features:

- BinaryCrossEntropyNode requires two input nodes: the predicted probabilities (input1) and the true binary labels (input2).
- The forward() method computes the BCE loss on the GPU, and the backward() method computes the gradients of the BCE loss.
- The loss is stored in the loss attribute, which is updated during the forward pass.
- Gradients are stored in the grad attribute of the output tensor during the backward pass.

This class is part of the nz::nodes namespace and is used in models for binary classification tasks.

`BinaryCrossEntropyNode (Node *input1, Node *input2)` [explicit]

Constructor to initialize a BinaryCrossEntropyNode for computing the Binary Cross-Entropy loss.

The constructor initializes a BinaryCrossEntropyNode, which applies the Binary Cross-Entropy loss function to two input tensors. It verifies that both input tensors have the same shape and establishes a connection in the computational graph by storing the second input tensor. The node's type is set to "BinaryCrossEntropy".

Parameters:

| Name | Description |
|---|---|
| `input1` | A pointer to the first input node. This tensor represents the predicted probabilities. |
| `input2` | A pointer to the second input node. This tensor represents the true binary labels (0 or 1). |

Exceptions:

| Exception | Condition |
|---|---|
| `std::invalid_argument` | If the shapes of the two input tensors do not match. |

The second input node (input2) is stored in the node's inputs vector.
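The shape check described for the constructor can be sketched in plain C++. The types below (`SketchNode`, `SketchBCENode`) are hypothetical stand-ins, not NeuZephyr's `Node` or `Tensor` classes; shapes are modeled as `std::vector<std::size_t>`, and the null-pointer check is an extra assumption of this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical stand-in for a graph node: only the fields needed to show
// the shape check. This is NOT NeuZephyr's real Node/Tensor API.
struct SketchNode {
    std::vector<std::size_t> shape;   // shape of this node's output tensor
    std::vector<SketchNode*> inputs;  // upstream nodes in the graph
    std::string type;                 // human-readable node type
};

// Hypothetical BCE node: validate shapes first, then wire up both inputs.
struct SketchBCENode : SketchNode {
    SketchBCENode(SketchNode* input1, SketchNode* input2) {
        if (input1 == nullptr || input2 == nullptr) {
            throw std::invalid_argument("BCE inputs must not be null");
        }
        // Predictions and labels must line up element by element.
        if (input1->shape != input2->shape) {
            throw std::invalid_argument("BCE input shapes do not match");
        }
        inputs.push_back(input1);  // predicted probabilities
        inputs.push_back(input2);  // true binary labels
        shape = input1->shape;
        type = "BinaryCrossEntropy";
    }
};
```

Constructing `SketchBCENode` from a `{2, 3}` prediction node and a `{3, 2}` label node throws `std::invalid_argument`, mirroring the documented exception; matching shapes produce a node with two recorded inputs.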

`void backward ()` [override, virtual]

Computes the gradients of the Binary Cross-Entropy (BCE) loss with respect to the inputs during the backward pass.

This method computes the gradients of the Binary Cross-Entropy loss with respect to both input tensors (input1 and input2). The gradients are computed only if the output tensor requires gradients (i.e., when it takes part in backpropagation). They are then propagated back to the input nodes so that upstream parameters can be updated during training.

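The gradient of Binary Cross-Entropy with respect to the predicted probabilities (y_pred) is computed as:

$$\frac{\partial \text{BCE}}{\partial y_{\text{pred}}} = -\frac{y_{\text{true}}}{y_{\text{pred}}} + \frac{1 - y_{\text{true}}}{1 - y_{\text{pred}}} = \frac{y_{\text{pred}} - y_{\text{true}}}{y_{\text{pred}}\,(1 - y_{\text{pred}})}$$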

where y_pred is the predicted probability and y_true is the true binary label (0 or 1).

The gradient computation is parallelized on the GPU using CUDA, enabling efficient backpropagation even for large tensors.
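As a CPU reference for the per-element work a GPU thread would perform, the sketch below computes the analytic BCE gradient and checks it against a central finite difference. It assumes the loss is a plain sum of per-element terms (a mean would scale every gradient by 1/N) and that predictions lie strictly inside (0, 1); the function names are illustrative, not NeuZephyr API.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Per-element BCE term for a prediction x in (0, 1) and a label y in {0, 1}.
double bceElement(double x, double y) {
    return -(y * std::log(x) + (1.0 - y) * std::log(1.0 - x));
}

// Analytic derivative: -y/x + (1 - y)/(1 - x), simplified to (x - y) / (x (1 - x)).
double bceGradElement(double x, double y) {
    return (x - y) / (x * (1.0 - x));
}

// CPU analogue of a backward kernel: each element's gradient is independent,
// so a GPU can assign one thread per index i.
std::vector<double> bceGrad(const std::vector<double>& pred,
                            const std::vector<double>& label) {
    std::vector<double> grad(pred.size());
    for (std::size_t i = 0; i < pred.size(); ++i) {
        grad[i] = bceGradElement(pred[i], label[i]);
    }
    return grad;
}

// Central finite difference, used only to sanity-check the analytic formula.
double numericGrad(double x, double y, double h = 1e-6) {
    return (bceElement(x + h, y) - bceElement(x - h, y)) / (2.0 * h);
}
```

For a prediction of 0.9 with label 1 the gradient is -1/0.9, and for 0.2 with label 0 it is 1/(1 - 0.2) = 1.25; the finite-difference check agrees with the closed form to well within 1e-5.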

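- The gradient is stored in the grad attribute of the output tensor, which is propagated to the input nodes during backpropagation.
- The backward pass runs only when the output tensor has requiresGrad() set to true, ensuring that gradients are computed only when necessary.
- Gradients with respect to the predicted probabilities (y_pred) are accumulated in the grad attribute of the output tensor.

Implements nz::nodes::Node.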

Definition at line 842 of file Nodes.cu.

`void forward ()` [override, virtual]

Computes the Binary Cross-Entropy (BCE) loss in the forward pass.

This method computes the Binary Cross-Entropy loss between the predicted probabilities (from the first input tensor) and the true binary labels (from the second input tensor). The loss is calculated element-wise and accumulated. The result is stored in the loss attribute, which can be accessed after the forward pass.

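The Binary Cross-Entropy loss is computed as:

$$\text{BCE}(y_{\text{pred}}, y_{\text{true}}) = -\left[ y_{\text{true}} \log(y_{\text{pred}}) + (1 - y_{\text{true}}) \log(1 - y_{\text{pred}}) \right]$$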

where y_pred is the predicted probability and y_true is the true label (0 or 1).

The calculation is done in parallel on the GPU using CUDA to handle large tensor sizes efficiently.
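The forward computation is a map (one BCE term per element) followed by a reduction (the sum), which is the structure a GPU kernel parallelizes. The CPU sketch below expresses it with C++17 `std::transform_reduce`; the name `bceForward`, the plain-sum accumulation, and the epsilon clamp that keeps `std::log` away from 0 are assumptions of this sketch, not details confirmed for NeuZephyr.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <functional>
#include <numeric>
#include <stdexcept>
#include <vector>

// Forward BCE as map + reduce: transform each (prediction, label) pair into its
// loss term, then sum the terms. std::transform_reduce may regroup the sum,
// much like a parallel GPU reduction; here it runs on the CPU.
double bceForward(const std::vector<double>& pred,
                  const std::vector<double>& label,
                  double eps = 1e-12) {
    if (pred.size() != label.size()) {
        throw std::invalid_argument("prediction and label sizes differ");
    }
    return std::transform_reduce(
        pred.begin(), pred.end(), label.begin(), 0.0,
        std::plus<>(),                 // reduce: accumulate the per-element terms
        [eps](double x, double y) {    // map: one BCE term per element
            // Assumed safeguard: clamp x into [eps, 1 - eps] so std::log never sees 0.
            const double p = std::clamp(x, eps, 1.0 - eps);
            return -(y * std::log(p) + (1.0 - y) * std::log(1.0 - p));
        });
}
```

With the clamp, a prediction of exactly 0.0 against label 1.0 yields a large but finite loss (about 27.6 for the default epsilon) instead of infinity.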

- The result is stored in the loss attribute.
- The loss attribute holds the accumulated Binary Cross-Entropy loss after the forward pass.

Implements nz::nodes::Node.

Definition at line 822 of file Nodes.cu.