NeuZephyr

Simple DL Framework


Represents the Mean Squared Error (MSE) loss function node in a computational graph.

Public Member Functions

| Return type | Member | Description |
|---|---|---|
| | `MeanSquaredErrorNode (Node *input1, Node *input2)` | Constructor to initialize a MeanSquaredErrorNode for computing the Mean Squared Error (MSE) loss. |
| void | `forward () override` | Computes the forward pass of the Mean Squared Error (MSE) loss function. |
| void | `backward () override` | Computes the backward pass of the Mean Squared Error (MSE) loss function. |

Public Member Functions inherited from nz::nodes::io::OutputNode

| Return type | Member | Description |
|---|---|---|
| | `OutputNode (Node *input)` | Constructor to initialize an OutputNode with a given input node. |
| void | `forward () override` | Forward pass for the OutputNode. |
| void | `backward () override` | Backward pass for the OutputNode. |
| Tensor::value_type | `getLoss () const` | Retrieves the loss value stored in the OutputNode. |
| void | `print (std::ostream &os) const override` | Prints the type, data, gradient, and loss of the node. |

Public Member Functions inherited from nz::nodes::Node

| Return type | Member | Description |
|---|---|---|
| void | `dataInject (Tensor::value_type *data, bool grad=false) const` | Injects data into a relevant tensor object, optionally setting its gradient requirement. |
| `template<typename Iterator>` void | `dataInject (Iterator begin, Iterator end, const bool grad=false) const` | Injects data from an iterator range into the output tensor of the Node, optionally setting its gradient requirement. |
| void | `dataInject (const std::initializer_list<Tensor::value_type> &data, bool grad=false) const` | Injects data from a std::initializer_list into the output tensor of the Node, optionally setting its gradient requirement. |

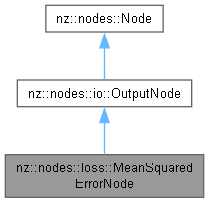

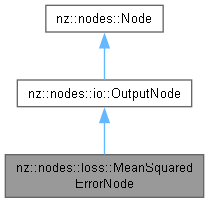

Represents the Mean Squared Error (MSE) loss function node in a computational graph.

The MeanSquaredErrorNode class computes the Mean Squared Error loss between two input tensors. The loss is calculated as the average of the squared differences between the corresponding elements of the two tensors:

$$\text{MSE}(x, y) = \frac{1}{n} \sum_{i=1}^{n} (x_i - y_i)^2$$

where x and y are the two input tensors, and n is the number of elements in the tensors. This class is typically used for training models, especially in regression tasks.

Key features:

- MeanSquaredErrorNode requires two input nodes, both of which must have tensors of the same shape.
- The computed loss is stored in the loss attribute, which is updated during the forward pass.
- Gradients are stored in the grad attribute of the input tensors during the backward pass.

This class is part of the nz::nodes namespace and is useful in models where Mean Squared Error is the loss function.

`explicit MeanSquaredErrorNode (Node *input1, Node *input2)`

Constructor to initialize a MeanSquaredErrorNode for computing the Mean Squared Error (MSE) loss.

The constructor initializes a MeanSquaredErrorNode, which computes the MSE loss between two input nodes. It checks that both input nodes have the same shape and sets up the necessary internal structures for the loss calculation.

| Parameter | Description |
|---|---|
| input1 | A pointer to the first input node. The output tensor of this node will be used as the predicted values. |
| input2 | A pointer to the second input node. The output tensor of this node will be used as the ground truth values. |

| Exception | Condition |
|---|---|
| std::invalid_argument | Thrown if input1 and input2 do not have the same shape. |

Note: The second input node (input2) is added to the inputs vector, while the first input node (input1) is handled by the OutputNode base class. The type attribute is set to "MeanSquaredError", indicating the type of operation this node represents.
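The constructor behaviour described above (shape check, base class handling the first input, second input appended, type string set) can be sketched with simplified stand-in types. Everything here is hypothetical: `ToyTensor`, `ToyNode`, `ToyOutputNode`, and `ToyMeanSquaredErrorNode` are illustrative names, not NeuZephyr classes, and the real constructor may order these steps differently.

```cpp
#include <cstddef>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical stand-ins: NOT the NeuZephyr Tensor/Node classes, only enough
// structure to illustrate the documented constructor behaviour.
struct ToyTensor {
    std::vector<std::size_t> shape;  // e.g. {rows, cols}
};

struct ToyNode {
    ToyTensor* output = nullptr;   // tensor produced by this node
    std::vector<ToyNode*> inputs;  // upstream nodes
    std::string type;              // operation name
};

// Sketch of an OutputNode-like base: it registers the first input itself.
struct ToyOutputNode : ToyNode {
    explicit ToyOutputNode(ToyNode* input) {
        inputs.push_back(input);
    }
};

struct ToyMeanSquaredErrorNode : ToyOutputNode {
    explicit ToyMeanSquaredErrorNode(ToyNode* input1, ToyNode* input2)
        : ToyOutputNode(input1) {  // input1 (predictions) handled by the base
        if (input1->output->shape != input2->output->shape) {
            throw std::invalid_argument("MeanSquaredErrorNode: input shapes must match");
        }
        inputs.push_back(input2);  // input2 (ground truth) appended here
        type = "MeanSquaredError";
    }
};
```

After construction, `inputs[0]` holds the predictions and `inputs[1]` the ground truth, which is the ordering the forward and backward passes rely on.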

`void backward () override` (virtual)

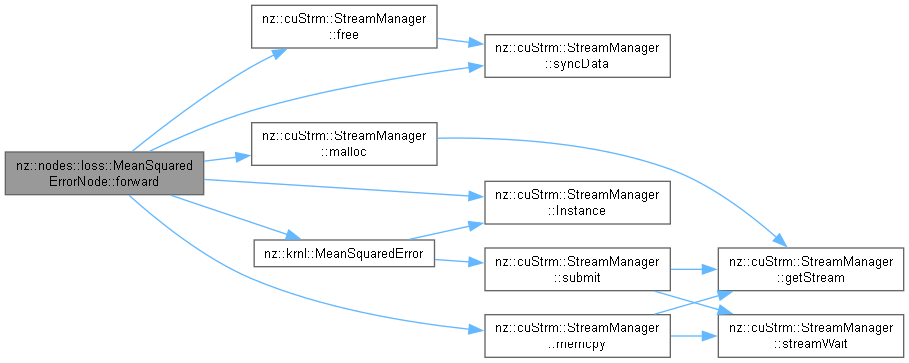

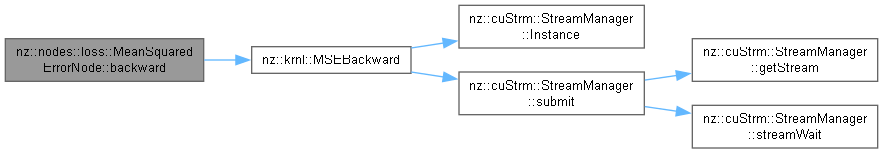

Computes the backward pass of the Mean Squared Error (MSE) loss function.

This method computes the gradients of the MSE loss with respect to the input tensors (inputs[0] and inputs[1]). The gradients are calculated only if the output tensor requires gradients, and they are used for backpropagation in training deep learning models.

The backward pass is performed using a CUDA kernel to efficiently compute the gradients in parallel on the GPU.

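Note:

- The method first checks whether the output tensor requires gradients by calling requiresGrad() on the output.
- If it does, a CUDA kernel (MSEBackward) is launched to compute the gradients of the loss with respect to both input tensors.
- The kernel uses the predicted values (inputs[0]) and the ground truth values (inputs[1]) and processes all elements of the tensors in parallel.
- The resulting gradients are stored in the grad attribute of the output tensor.
- The requiresGrad() check ensures that gradients are only computed when necessary, avoiding unnecessary computation.

Implements nz::nodes::Node.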

Definition at line 804 of file Nodes.cu.
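Differentiating the MSE formula gives the per-element gradient dL/dx_i = 2 (x_i - y_i) / n, and dL/dy_i = -dL/dx_i. The sketch below computes the prediction-side gradient on the CPU, with one loop iteration standing in for one GPU thread. It assumes the loss node receives an upstream gradient of 1; the exact scaling used by the MSEBackward kernel is not documented here, and the function name `mseBackward` is hypothetical.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical CPU sketch of the MSE backward pass with respect to the predictions:
//   dL/dpred_i = 2 * (pred_i - target_i) / n   (and dL/dtarget_i = -dL/dpred_i).
// Each loop iteration mirrors what one thread of a CUDA kernel would compute.
std::vector<float> mseBackward(const std::vector<float>& pred,
                               const std::vector<float>& target,
                               bool requiresGrad) {
    if (!requiresGrad) {
        return {};  // mirrors the requiresGrad() guard: skip all gradient work
    }
    if (pred.size() != target.size() || pred.empty()) {
        throw std::invalid_argument("mseBackward: inputs must be non-empty and the same shape");
    }
    const float scale = 2.0f / static_cast<float>(pred.size());
    std::vector<float> grad(pred.size());
    for (std::size_t i = 0; i < pred.size(); ++i) {
        grad[i] = scale * (pred[i] - target[i]);
    }
    return grad;
}
```

With pred = {1, 2, 3, 4} and target = {0, 2, 5, 4}, n = 4 gives a scale of 0.5, so the gradient is {0.5, 0, -1, 0}.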

`void forward () override` (virtual)

Computes the forward pass of the Mean Squared Error (MSE) loss function.

This method calculates the Mean Squared Error (MSE) loss between two input tensors. The loss is computed by comparing the predicted values (inputs[0]) to the ground truth values (inputs[1]) element-wise. The result is accumulated in the loss attribute of the node.

The computation is performed in parallel on the GPU using CUDA kernels for efficiency.

Note:

- The method first calls the forward() method of the OutputNode base class to handle any initialization and setup required by the base class.
- The inputs vector must contain exactly two nodes: the predicted values and the ground truth values. Both tensors must have the same shape, and this is validated during initialization.
- The computed MSE loss is stored in the loss attribute, which is updated after each forward pass.

Implements nz::nodes::Node.

Definition at line 785 of file Nodes.cu.
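Because the forward pass runs in parallel and accumulates its result into the loss attribute, a common GPU pattern is a two-stage reduction: each block reduces its slice to a partial sum, and the partial sums are then combined and divided by n. The CPU emulation below illustrates that pattern under stated assumptions: `mseForwardBlocked` and the `blockSize` parameter are hypothetical, and NeuZephyr's actual kernel layout and reduction order may differ.

```cpp
#include <algorithm>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical CPU emulation of a block-wise parallel MSE forward pass.
// Assumption: the real CUDA kernel's grid layout and reduction order may differ.
double mseForwardBlocked(const std::vector<float>& pred,
                         const std::vector<float>& target,
                         std::size_t blockSize) {
    if (pred.size() != target.size() || pred.empty() || blockSize == 0) {
        throw std::invalid_argument("mseForwardBlocked: invalid inputs");
    }
    const std::size_t n = pred.size();
    const std::size_t numBlocks = (n + blockSize - 1) / blockSize;  // ceil(n / blockSize)

    // Stage 1: one partial sum of squared differences per block
    // (on a GPU this would typically be a shared-memory reduction).
    std::vector<double> partial(numBlocks, 0.0);
    for (std::size_t b = 0; b < numBlocks; ++b) {
        const std::size_t begin = b * blockSize;
        const std::size_t end = std::min(begin + blockSize, n);
        for (std::size_t i = begin; i < end; ++i) {
            const double d = static_cast<double>(pred[i]) - target[i];
            partial[b] += d * d;
        }
    }

    // Stage 2: accumulate the partial sums into the loss, then average over n.
    double loss = 0.0;
    for (double p : partial) {
        loss += p;
    }
    return loss / static_cast<double>(n);
}
```

The result does not depend on `blockSize` beyond floating-point rounding; for pred = {1, 2, 3, 4} and target = {0, 2, 5, 4} every block size yields 1.25.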