Implements the Softmax activation function as a node in a neural network computational graph.


| Return type | Member function | Description |
|---|---|---|
| | SoftmaxNode (Node *input) | Constructor to initialize a SoftmaxNode for applying the Softmax activation function. |
| void | forward () override | Performs the forward pass of the Softmax operation. |
| void | backward () override | Performs the backward pass of the Softmax operation. |
| virtual void | print (std::ostream &os) const | Prints the type, data, and gradient of the node. |
| void | dataInject (Tensor::value_type *data, bool grad=false) const | Injects data into a relevant tensor object, optionally setting its gradient requirement. |
| template<typename Iterator> void | dataInject (Iterator begin, Iterator end, const bool grad=false) const | Injects data from an iterator range into the output tensor of the InputNode, optionally setting its gradient requirement. |
| void | dataInject (const std::initializer_list<Tensor::value_type> &data, bool grad=false) const | Injects data from a std::initializer_list into the output tensor of the Node, optionally setting its gradient requirement. |


The SoftmaxNode class applies the Softmax activation function to the input tensor, transforming it into a probability distribution. This node is commonly used as the final layer in classification networks to convert raw scores into probabilities.

The Softmax function is defined as:

Softmax(x_i) = exp(x_i) / sum(exp(x_j))

where x_i is the i-th element of the input vector and the sum is over all elements j.
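The following C++ sketch is a CPU reference for the definition above. It evaluates the formula directly: one pass accumulates the denominator and a second pass divides each exponential by it. It is not the library's CUDA code. The name softmaxNaive is hypothetical, and the sketch deliberately omits any stability safeguard so that it matches the formula term by term.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Direct transcription of Softmax(x_i) = exp(x_i) / sum(exp(x_j)).
// Hypothetical CPU helper; no overflow protection is applied.
std::vector<float> softmaxNaive(const std::vector<float>& x) {
    assert(!x.empty());
    float expSum = 0.0f;
    for (float v : x) expSum += std::exp(v);        // denominator: sum over all j
    std::vector<float> out(x.size());                // output keeps the input's length
    for (std::size_t i = 0; i < x.size(); ++i)
        out[i] = std::exp(x[i]) / expSum;            // numerator for element i
    return out;
}
```

For the logits used in the usage example ({2.0f, 1.0f, 0.1f, 3.0f, -1.0f}), the outputs sum to 1 up to rounding and preserve the ordering of the inputs. The largest probability is at index 3.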

Key features and characteristics:

- Probability Output: Transforms input into a probability distribution where all elements sum to 1.

- Numerical Stability: The forward pass computes exp(x) directly for each input element, so extreme input values can overflow or underflow (see Limitations and considerations below).

- Shape Preservation: The output tensor maintains the same shape as the input tensor.

- GPU Acceleration: Uses CUDA for efficient parallel computation on the GPU.

- Gradient Computation: Supports the backward pass for gradient calculation in neural network training.

- Two-Pass Forward Computation: The forward pass first computes the sum of exponentials, then normalizes each element by that sum.

Implementation details:

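- The constructor only sets up the node (input connection, output tensor, gradient tracking) and initializes the sum member variable to 0. The sum of exponentials is computed in the forward pass.

- The forward pass computes the sum of exponentials and then applies the Softmax function using that sum.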

- The backward pass computes the full Jacobian matrix for accurate gradient calculation.

- CUDA kernels are used for parallel computation in both forward and backward passes.

Use cases:

- Output layer of multi-class classification networks.

- Attention mechanisms in sequence-to-sequence models.

- Any scenario requiring normalization of a vector into a probability distribution.

Limitations and considerations:

- May suffer from underflow or overflow for extreme input values.

- The backward pass materializes the full n × n Jacobian for n output elements, which can be memory-intensive for large outputs.
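The overflow/underflow risk can be reproduced on the CPU. The C++ sketch below uses hypothetical helper names and plain loops rather than the library's kernels. It contrasts the direct formula with the common max-subtraction technique, which is not claimed to be what SoftmaxNode does. The technique works because exp(x_i - m) / sum(exp(x_j - m)) equals the original ratio for any constant m. Choosing m = max_j x_j keeps every exponent at or below 0.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Direct formula, no shift. In float, std::exp overflows to +inf once its
// argument exceeds about 88.7, and inf / inf produces NaN; very negative
// inputs underflow to 0, giving 0 / 0 = NaN.
std::vector<float> softmaxUnshifted(const std::vector<float>& x) {
    assert(!x.empty());
    float expSum = 0.0f;
    for (float v : x) expSum += std::exp(v);
    std::vector<float> out(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) out[i] = std::exp(x[i]) / expSum;
    return out;
}

// Max-subtraction: the factor exp(-m) cancels between numerator and
// denominator, so the result is mathematically unchanged, but every exponent
// is <= 0 and the largest term is exactly exp(0) = 1.
std::vector<float> softmaxShifted(const std::vector<float>& x) {
    assert(!x.empty());
    const float m = *std::max_element(x.begin(), x.end());
    std::vector<float> out(x.size());
    float expSum = 0.0f;
    for (std::size_t i = 0; i < x.size(); ++i) {
        out[i] = std::exp(x[i] - m);   // in [0, 1]
        expSum += out[i];
    }
    for (float& v : out) v /= expSum;  // expSum >= 1, so no division by zero
    return out;
}
```

For inputs such as {1000, 1000}, the unshifted version returns NaN while the shifted version returns {0.5, 0.5}. For moderate inputs, the two agree to within rounding.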

- Note

- This implementation assumes the input is a 1D or 2D tensor. For higher dimensions, consider using a dimension-specific Softmax implementation.

- The node automatically handles gradient tracking based on the input tensor's requirements.

- For very large inputs, consider using LogSoftmax for improved numerical stability.
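Here is a minimal log-sum-exp sketch of LogSoftmax, assuming a 1D std::vector<float> input. The name logSoftmax is hypothetical and not part of the documented API. The sketch uses the identity log Softmax(x_i) = x_i - (m + log(sum(exp(x_j - m)))) with m = max_j x_j, so it never forms exp(x_i) for large positive x_i.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// log Softmax(x_i) = x_i - log(sum_j exp(x_j))
//                  = x_i - (m + log(sum_j exp(x_j - m))),  with m = max_j x_j
std::vector<float> logSoftmax(const std::vector<float>& x) {
    assert(!x.empty());
    const float m = *std::max_element(x.begin(), x.end());
    float shiftedSum = 0.0f;
    for (float v : x) shiftedSum += std::exp(v - m);   // every exponent <= 0
    const float logSumExp = m + std::log(shiftedSum);  // shiftedSum >= 1
    std::vector<float> out(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) out[i] = x[i] - logSumExp;
    return out;
}
```

Exponentiating the result recovers probabilities that sum to 1. For {1000, 0}, the sketch returns the finite values {0, -1000}.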

- See also

- forward() for the Softmax computation in the forward pass.

- backward() for gradient computation in the backward pass.

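Usage Example:

```cpp
#include <iostream>
#include <vector>
#include "Nodes.cuh"  // namespace qualifiers (nz::nodes::...) omitted below, as in the original example

int main() {
    InputNode input({1, 1, 1, 5}, true);
    std::vector<float> logits{2.0f, 1.0f, 0.1f, 3.0f, -1.0f};
    input.output->dataInject(logits.begin(), logits.end());

    SoftmaxNode softmax(&input);  // connect the Softmax node to the input node
    softmax.forward();

    std::cout << "Probabilities: " << *softmax.output << std::endl;

    // backward() assumes softmax.output->grad() has already been set,
    // e.g. by a downstream loss node.
    softmax.backward();
}
```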

- Author

- Mgepahmge (https://github.com/Mgepahmge)

- Date

- 2024/12/5

Definition at line 3152 of file Nodes.cuh.

nz::nodes::calc::SoftmaxNode::SoftmaxNode (Node *input) [explicit]

Constructor to initialize a SoftmaxNode for applying the Softmax activation function.

The constructor initializes a SoftmaxNode, which applies the Softmax activation function to an input tensor. It establishes a connection to the input node, initializes the output tensor, and sets up the node for Softmax computation.

- Parameters

- input: A pointer to the input node. Its output tensor will have the Softmax activation applied.

The Softmax activation function is defined as:

Softmax(x_i) = exp(x_i) / sum(exp(x_j))

where x_i is the i-th element of the input vector and the sum is over all elements j.

Key operations performed by the constructor:

- Initializes the sum member variable to 0, which may be used in future computations.

- Adds the input node to the inputs vector, establishing the connection in the computational graph.

- Determines whether gradient tracking is required based on the input tensor's requiresGrad property.

- Initializes the output tensor with the same shape as the input tensor and the appropriate gradient tracking.

- Sets the node type to "Softmax" for identification in the computational graph.
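To make the constructor's bookkeeping concrete, here is a toy C++ mirror of the steps listed above. ToyTensor, ToyNode and ToySoftmaxNode are hypothetical stand-ins with far fewer members than the real nz::nodes::Node and Tensor classes. Only the order of operations follows the documentation.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-ins; the real Node and Tensor types differ.
struct ToyTensor {
    std::vector<int> shape;
    bool requiresGrad;
};

struct ToyNode {
    std::vector<ToyNode*> inputs;        // upstream edges in the graph
    std::shared_ptr<ToyTensor> output;   // tensor produced by this node
    std::string type;
    virtual ~ToyNode() = default;
};

struct ToySoftmaxNode : ToyNode {
    float sum;
    explicit ToySoftmaxNode(ToyNode* input) : sum(0.0f) {  // sum starts at 0
        assert(input != nullptr && input->output != nullptr);
        inputs.push_back(input);                            // connect to the graph
        const bool grad = input->output->requiresGrad;      // inherit gradient tracking
        output = std::make_shared<ToyTensor>(ToyTensor{input->output->shape, grad});  // same shape
        type = "Softmax";
        // No Softmax math happens here; that is deferred to forward().
    }
};
```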

- Note

- The Softmax function normalizes the input to a probability distribution over predicted output classes.

- This constructor only sets up the node structure; the actual Softmax computation is performed in the forward pass.

- Gradient tracking for the output tensor is automatically set based on the input tensor's requirements.

- The sum variable initialized here may be used for optimizations in the forward or backward passes.

- See also

- forward() for the implementation of the Softmax computation in the forward pass.

- backward() for the gradient computation in the backward pass.

- Author

- Mgepahmge (https://github.com/Mgepahmge)

- Date

- 2023/12/06

Definition at line 524 of file Nodes.cu.

void nz::nodes::calc::SoftmaxNode::backward () [override, virtual]

Performs the backward pass of the Softmax operation.

This method implements the gradient computation for the Softmax activation function. It calculates the Jacobian matrix of the Softmax function and then uses it to compute the gradient with respect to the input.

The backward pass is implemented in two main steps:

- Calculation of the Softmax Jacobian: computes the Jacobian matrix for the Softmax function using CUDA parallelization.

- Gradient computation: performs matrix multiplication between the Jacobian and the output gradient to obtain the input gradient.

The Jacobian of the Softmax function is defined as:

J_ij = softmax_i * (δ_ij - softmax_j)

where δ_ij is the Kronecker delta.
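The Jacobian formula follows from the quotient rule. The standard derivation below writes s_i for softmax_i, S for the denominator, and g_i = ∂L/∂s_i for the incoming output gradient. It also shows that the Jacobian is symmetric and that its product with g reduces to an O(n) expression. It is included for reference only; the backward pass documented here materializes J explicitly.

```latex
s_i = \frac{e^{x_i}}{S}, \qquad S = \sum_k e^{x_k}

\frac{\partial s_i}{\partial x_j}
  = \frac{\delta_{ij} e^{x_i} S - e^{x_i} e^{x_j}}{S^2}
  = s_i (\delta_{ij} - s_j) = J_{ij}

J = \operatorname{diag}(s) - s s^{\top} \qquad (\text{symmetric, so } J^{\top} = J)

\frac{\partial L}{\partial x_j}
  = \sum_i g_i J_{ij}
  = g_j s_j - s_j \sum_i g_i s_i
  = s_j \Big( g_j - \sum_i g_i s_i \Big)
```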

Key operations:

- Initialization of the Jacobian tensor.

- CUDA kernel setup for parallel computation of the Jacobian.

- Execution of the SoftmaxJacobian CUDA kernel to compute the Jacobian matrix.

- CUDA kernel setup for matrix multiplication.

- Execution of the GeneralMatrixMul CUDA kernel to compute the final gradient.
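The two steps above can be sketched on the CPU in C++. The sketch assumes a single 1D vector s holding the forward output and a vector gradOut holding dL/ds. The function names are hypothetical, and the nested loops stand in for the SoftmaxJacobian and GeneralMatrixMul kernels. A second function computes the same gradient with the O(n) identity dx_i = s_i (g_i - sum_j g_j s_j), which avoids the n × n buffer.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Step 1: J_ij = s_i * (delta_ij - s_j), stored row-major as n x n.
std::vector<float> softmaxJacobian(const std::vector<float>& s) {
    const std::size_t n = s.size();
    std::vector<float> J(n * n);
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t j = 0; j < n; ++j)
            J[i * n + j] = s[i] * ((i == j ? 1.0f : 0.0f) - s[j]);
    return J;
}

// Step 2: dL/dx = J^T * dL/ds (J is symmetric, so J * g gives the same result).
std::vector<float> softmaxBackwardJacobian(const std::vector<float>& s,
                                           const std::vector<float>& gradOut) {
    assert(s.size() == gradOut.size());
    const std::size_t n = s.size();
    const std::vector<float> J = softmaxJacobian(s);
    std::vector<float> gradIn(n, 0.0f);
    for (std::size_t i = 0; i < n; ++i)
        for (std::size_t j = 0; j < n; ++j)
            gradIn[i] += J[j * n + i] * gradOut[j];  // (J^T g)_i
    return gradIn;
}

// Same gradient without materializing J: dx_i = s_i * (g_i - sum_j g_j * s_j).
std::vector<float> softmaxBackwardFast(const std::vector<float>& s,
                                       const std::vector<float>& gradOut) {
    assert(s.size() == gradOut.size());
    float dot = 0.0f;
    for (std::size_t j = 0; j < s.size(); ++j) dot += gradOut[j] * s[j];
    std::vector<float> gradIn(s.size());
    for (std::size_t i = 0; i < s.size(); ++i) gradIn[i] = s[i] * (gradOut[i] - dot);
    return gradIn;
}
```

A constant upstream gradient yields a zero input gradient, because the Softmax output always sums to 1.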

- Note

- This implementation uses CUDA for efficient parallel computation on the GPU.

- The Jacobian computation and matrix multiplication are performed entirely on the GPU.

- The method assumes that the output gradient (output->grad()) has already been set.

- The computed gradient is stored in the input node's gradient (inputs[0]->output->grad()).

- See also

- forward() for the corresponding forward pass implementation.

- Author

- Mgepahmge (https://github.com/Mgepahmge)

- Date

- 2023/12/06

Implements nz::nodes::Node.

Definition at line 538 of file Nodes.cu.

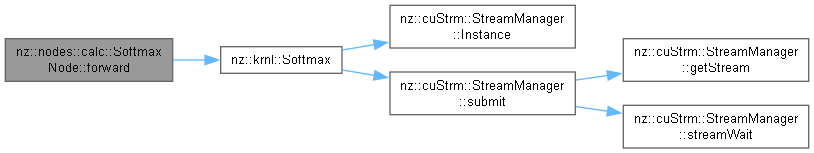

void nz::nodes::calc::SoftmaxNode::forward () [override, virtual]

Performs the forward pass of the Softmax operation.

This method implements the forward computation for the Softmax activation function. It calculates the exponential sum of the input elements and then applies the Softmax function to each element.

The forward pass is implemented in two main steps:

- Calculation of the sum of exponentials: uses CUDA parallelization to compute exp(x) for each input element, then accumulates these exponentials to obtain the sum used for normalization.

- Application of the Softmax function: computes exp(x_i) / sum(exp(x_j)) for each element using CUDA.

The Softmax function is defined as:

Softmax(x_i) = exp(x_i) / sum(exp(x_j))

where x_i is the i-th element of the input vector and the sum is over all elements j.

Key operations:

- CUDA kernel setup for parallel computation.

- Memory allocation and management for intermediate results.

- Execution of the SummationExp CUDA kernel for exponential sum calculation.

- Data transfer between GPU and CPU for sum accumulation.

- Execution of the Softmax CUDA kernel for final output computation.
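The key operations above can be simulated on the host to show how partial sums from a reduction combine into the normalization constant. This C++ sketch makes an assumption that the source does not confirm: the exponential-sum step produces one partial sum per block of blockDim elements, and those partial sums are copied to the host and added up. partialExpSums and softmaxFromPartials are hypothetical names, and ordinary loops replace the SummationExp and Softmax kernels.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One partial sum of exp(x) per "block" of blockDim elements (assumed layout).
std::vector<float> partialExpSums(const std::vector<float>& in, std::size_t blockDim) {
    assert(blockDim > 0);
    const std::size_t gridDim = (in.size() + blockDim - 1) / blockDim;  // ceil-div, like a launch config
    std::vector<float> partial(gridDim, 0.0f);
    for (std::size_t b = 0; b < gridDim; ++b) {
        const std::size_t end = std::min(in.size(), (b + 1) * blockDim);
        for (std::size_t i = b * blockDim; i < end; ++i) partial[b] += std::exp(in[i]);
    }
    return partial;  // what would be copied device -> host
}

// Host-side accumulation of the partial sums, then elementwise normalization.
std::vector<float> softmaxFromPartials(const std::vector<float>& in, std::size_t blockDim) {
    float expSum = 0.0f;
    for (float p : partialExpSums(in, blockDim)) expSum += p;  // CPU accumulation
    std::vector<float> out(in.size());
    for (std::size_t i = 0; i < in.size(); ++i) out[i] = std::exp(in[i]) / expSum;
    return out;
}
```

Floating-point addition is not associative, so changing blockDim can perturb the result in the last bits. The probabilities still agree to within rounding.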

- Note

- This implementation uses CUDA for efficient parallel computation on the GPU.

- The method handles both the exponential sum calculation and the final Softmax normalization.

- Temporary memory is allocated and freed for intermediate calculations.

- The final output is stored in the node's output tensor.

- See also

- Softmax CUDA kernel for the implementation of the final Softmax computation.

- backward() for the corresponding backward pass implementation.

- Author

- Mgepahmge (https://github.com/Mgepahmge)

- Date

- 2023/12/06

Implements nz::nodes::Node.

Definition at line 534 of file Nodes.cu.

Public Member Functions inherited from nz::nodes::Node
