NeuZephyr

Simple DL Framework

Represents a hyperbolic tangent (tanh) activation function node in a computational graph.

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | `TanhNode (Node *input)` | Constructor to initialize a TanhNode for applying the tanh activation function. |
| `void` | `forward () override` | Forward pass for the TanhNode to apply the tanh activation function. |
| `void` | `backward () override` | Backward pass for the TanhNode to compute gradients. |

Public Member Functions inherited from nz::nodes::Node

| Return type | Member | Description |
| --- | --- | --- |
| `virtual void` | `print (std::ostream &os) const` | Prints the type, data, and gradient of the node. |
| `void` | `dataInject (Tensor::value_type *data, bool grad=false) const` | Injects data into a relevant tensor object, optionally setting its gradient requirement. |
| `template<typename Iterator> void` | `dataInject (Iterator begin, Iterator end, const bool grad=false) const` | Injects data from an iterator range into the output tensor of the InputNode, optionally setting its gradient requirement. |
| `void` | `dataInject (const std::initializer_list< Tensor::value_type > &data, bool grad=false) const` | Injects data from a std::initializer_list into the output tensor of the Node, optionally setting its gradient requirement. |

Represents a hyperbolic tangent (tanh) activation function node in a computational graph.

The TanhNode class applies the hyperbolic tangent (tanh) activation function to the input tensor. The tanh function is defined as:

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$

It maps input values to the range (-1, 1) and is commonly used in neural networks for non-linear activation.

Key features:

This class is part of the nz::nodes namespace and is commonly used to add non-linearity to models or normalize outputs.

|

explicit |

Constructor to initialize a TanhNode for applying the tanh activation function.

The constructor initializes a TanhNode, which applies the hyperbolic tangent (tanh) activation function to an input tensor. It establishes a connection to the input node, initializes the output tensor, and sets the type of the node to "Tanh".

| input | A pointer to the input node. Its output tensor will have the tanh activation applied. |

Note:

- The input node is added to the `inputs` vector to establish the connection in the computational graph.
- The `output` tensor is initialized with the same shape as the input tensor, and its gradient tracking follows the input tensor's gradient requirement.
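The wiring the constructor performs can be pictured with a small stand-alone mock. This is only a sketch: `MockTensor`, `MockNode`, and `MockTanhNode` are hypothetical stand-ins invented for illustration, not the NeuZephyr classes, and the real member names and ownership model may differ.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <string>
#include <vector>

// Hypothetical stand-in for a tensor: only the shape and the gradient flag.
struct MockTensor {
    std::vector<std::size_t> shape;
    bool requiresGrad = false;
};

// Hypothetical stand-in for a graph node.
struct MockNode {
    std::vector<MockNode*> inputs;       // upstream nodes in the graph
    std::shared_ptr<MockTensor> output;  // tensor produced by this node
    std::string type;                    // human-readable node type
};

// Mirrors the three steps described above: register the input node,
// create an output with the input's shape and gradient flag, set the type.
struct MockTanhNode : MockNode {
    explicit MockTanhNode(MockNode* input) {
        assert(input != nullptr && input->output != nullptr);
        inputs.push_back(input);
        output = std::make_shared<MockTensor>();
        output->shape = input->output->shape;
        output->requiresGrad = input->output->requiresGrad;
        type = "Tanh";
    }
};
```

Taking the input as a raw, non-owning pointer matches the `Node *input` signature documented here; who owns the nodes in the actual framework is not stated on this page.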

|

overridevirtual |

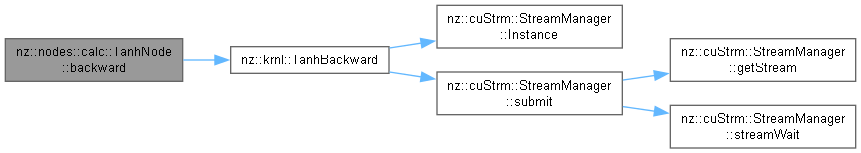

Backward pass for the TanhNode to compute gradients.

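The backward() method computes the gradient of the loss with respect to the input tensor by applying the derivative of the tanh activation function. With $y = \tanh(x)$ denoting the output of the forward pass, the gradient is propagated using the formula:

$$\frac{\partial L}{\partial x} = \frac{\partial L}{\partial y} \cdot \left(1 - y^{2}\right)$$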

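Note:

- A CUDA kernel (`TanhBackward`) is launched to compute the gradients in parallel on the GPU.
- The derivative of tanh is computed from the `output` tensor's data and combined with the gradient of the output tensor to compute the input gradient.
- Gradients are computed only if the input tensor's `requiresGrad` property is true.

Implements nz::nodes::Node.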

Definition at line 400 of file Nodes.cu.
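Because the derivative of tanh is 1 - tanh²(x), the input gradient can be formed from the forward output alone, without re-reading the input. The following CPU reference is a minimal sketch of that arithmetic, not the `TanhBackward` kernel itself; the function name `tanhBackwardReference` is hypothetical, and whether the real kernel overwrites or accumulates into the input gradient is not stated on this page.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical CPU reference for the tanh backward pass.
// y       : forward output, y[i] = tanh(x[i])
// gradOut : gradient of the loss with respect to y
// returns : gradient of the loss with respect to x,
//           gradIn[i] = gradOut[i] * (1 - y[i]^2)
std::vector<float> tanhBackwardReference(const std::vector<float>& y,
                                         const std::vector<float>& gradOut) {
    assert(y.size() == gradOut.size());
    std::vector<float> gradIn(y.size());
    for (std::size_t i = 0; i < y.size(); ++i) {
        gradIn[i] = gradOut[i] * (1.0f - y[i] * y[i]);
    }
    return gradIn;
}
```

A reference like this can be handy for checking GPU results element by element on small tensors.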

|

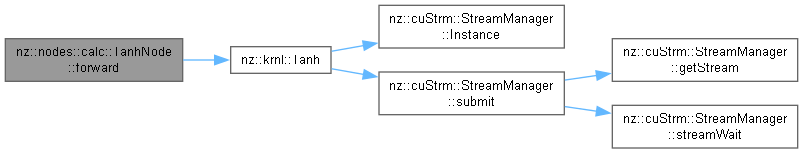

overridevirtual |

Forward pass for the TanhNode to apply the tanh activation function.

The forward() method applies the hyperbolic tangent (tanh) activation function element-wise to the input tensor. The result is stored in the output tensor. The tanh function is defined as:

$$\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}$$

It maps input values to the range (-1, 1).

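Note:

- A CUDA kernel (`Tanh`) is launched to compute the activation function in parallel on the GPU.
- The launch configuration is derived from the size of the `output` tensor to optimize GPU utilization.
- The result is stored in the `output` tensor for use in subsequent layers or operations.

Implements nz::nodes::Node.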

Definition at line 394 of file Nodes.cu.
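As a rough illustration of the element-wise forward computation, here is a CPU reference sketch. It is not the `Tanh` kernel from Nodes.cu: the names `tanhForwardReference` and `blocksFor` are hypothetical, and the ceiling-division rule for sizing a one-dimensional grid is a common convention assumed here, not something this page confirms.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical CPU reference for an element-wise tanh forward pass.
// Writes tanh(input[i]) into output[i]; both buffers must have the same size.
void tanhForwardReference(const std::vector<float>& input,
                          std::vector<float>& output) {
    assert(input.size() == output.size());
    for (std::size_t i = 0; i < input.size(); ++i) {
        output[i] = std::tanh(input[i]);
    }
}

// Ceiling division (an assumed convention): the smallest number of blocks
// such that blocks * threadsPerBlock covers every element.
std::size_t blocksFor(std::size_t elementCount, std::size_t threadsPerBlock) {
    assert(threadsPerBlock > 0);
    return (elementCount + threadsPerBlock - 1) / threadsPerBlock;
}
```

With 256 threads per block, for example, a 1000-element tensor would need four blocks under this rule, and the kernel would have to guard against indices past the end of the tensor in the last block.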