NeuZephyr

Simple DL Framework

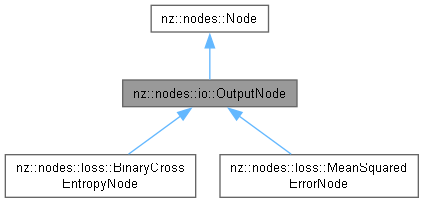

Base class for loss function nodes in a computational graph.

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | OutputNode (Node *input) | Constructor to initialize an OutputNode with a given input node. |
| void | forward () override | Forward pass for the OutputNode. |
| void | backward () override | Backward pass for the OutputNode. |
| Tensor::value_type | getLoss () const | Retrieves the loss value stored in the OutputNode. |

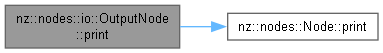

| Return type | Member | Description |
| --- | --- | --- |
| void | print (std::ostream &os) const override | Prints the type, data, gradient, and loss of the node. |

Public Member Functions inherited from nz::nodes::Node

| Return type | Member | Description |
| --- | --- | --- |
| void | dataInject (Tensor::value_type *data, bool grad=false) const | Injects data into a relevant tensor object, optionally setting its gradient requirement. |
| template<typename Iterator > void | dataInject (Iterator begin, Iterator end, const bool grad=false) const | Injects data from an iterator range into the output tensor of the InputNode, optionally setting its gradient requirement. |
| void | dataInject (const std::initializer_list< Tensor::value_type > &data, bool grad=false) const | Injects data from a std::initializer_list into the output tensor of the Node, optionally setting its gradient requirement. |

Base class for loss function nodes in a computational graph.

The OutputNode class serves as the base class for all nodes representing loss functions in a neural network. It connects to the node that produces the network's final result, and derived classes compute the loss from that result. During the forward pass, it simply takes over the output of the input node. During the backward pass, it sets the gradient of the output tensor to 1, which marks the starting point of gradient flow.

The OutputNode class is used as a parent class for more specific loss function nodes (such as Mean Squared Error or Cross-Entropy loss), which can further extend its functionality to compute the actual loss and update the loss member.

Key features:

- The loss member variable holds the value of the computed loss. Specific loss functions can update this value by extending the OutputNode class.
- The forward() method simply sets the output member to the output of the input node.
- The backward() method sets the gradient of the output tensor to 1, which marks the start of gradient propagation for the backward pass.
- The getLoss() method provides access to the loss value stored in the loss member.

This class is part of the nz::nodes namespace, and it is designed to be extended for implementing various loss functions.

Note: The OutputNode class does not perform any specific loss computation. It is intended to be a base class for more specific loss function nodes that compute and track the actual loss.
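To make the extension pattern concrete, here is a minimal, self-contained sketch of how a derived loss node might build on such a base class. It does not use the NeuZephyr headers: everything in the `sketch` namespace (`Tensor`, `Node`, `ConstantNode`, `OutputNode`, `MseSketchNode`) is a hypothetical host-side stand-in, and the real classes may differ in members and signatures.

```cpp
#include <algorithm>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

namespace sketch {

// Hypothetical stand-in for a tensor: values plus a gradient buffer.
struct Tensor {
    std::vector<float> data;
    std::vector<float> grad;
    explicit Tensor(std::vector<float> values)
        : data(std::move(values)), grad(data.size(), 0.0f) {}
};

// Hypothetical minimal node interface.
struct Node {
    std::vector<Node*> inputs;
    std::shared_ptr<Tensor> output;
    virtual ~Node() = default;
    virtual void forward() = 0;
    virtual void backward() = 0;
};

// Leaf node that only holds values.
struct ConstantNode : Node {
    explicit ConstantNode(std::vector<float> values) {
        output = std::make_shared<Tensor>(std::move(values));
    }
    void forward() override {}
    void backward() override {}
};

// Base behaviour as described above: relay the input, seed the gradient with 1.
class OutputNode : public Node {
public:
    explicit OutputNode(Node* input) { inputs.push_back(input); }
    void forward() override { output = inputs[0]->output; }
    void backward() override {
        std::fill(output->grad.begin(), output->grad.end(), 1.0f);
    }
    float getLoss() const { return loss; }

protected:
    float loss = 0.0f;  // updated by derived classes
};

// Derived node (assumption): mean squared error against a target node.
class MseSketchNode : public OutputNode {
public:
    MseSketchNode(Node* prediction, Node* target) : OutputNode(prediction) {
        inputs.push_back(target);
    }
    void forward() override {
        OutputNode::forward();
        const auto& p = inputs[0]->output->data;
        const auto& t = inputs[1]->output->data;
        float sum = 0.0f;
        for (std::size_t i = 0; i < p.size(); ++i) {
            const float d = p[i] - t[i];
            sum += d * d;
        }
        loss = sum / static_cast<float>(p.size());
    }
    void backward() override {
        // d(loss)/d(p_i) = 2 * (p_i - t_i) / n
        const auto& p = inputs[0]->output->data;
        const auto& t = inputs[1]->output->data;
        auto& g = inputs[0]->output->grad;
        const float n = static_cast<float>(p.size());
        for (std::size_t i = 0; i < p.size(); ++i) {
            g[i] = 2.0f * (p[i] - t[i]) / n;
        }
    }
};

}  // namespace sketch
```

In this sketch the derived class reuses the base forward() to relay the prediction and only adds the loss bookkeeping, which is the division of labour the description above suggests.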

OutputNode (Node *input) [explicit]

Constructor to initialize an OutputNode with a given input node.

This constructor initializes an OutputNode by accepting an input node. The output of this node will be set to the output of the provided input node during the forward pass. The loss is initialized to 0, and the type is set to "Output".

The OutputNode class is designed to represent the output layer of a neural network, and it serves as the base class for loss function nodes. The forward() and backward() methods will be responsible for propagating data and gradients, respectively.

Parameters

| Name | Description |
| --- | --- |
| input | A pointer to the Node that serves as the input to the OutputNode. The output of this node will be used as the OutputNode's output. |

This constructor sets up the node with a reference to its input, allowing the OutputNode to pass data from its input node and compute the loss during the forward and backward passes.

- An InputNode or any other node that provides the final output of the network can be passed to this constructor.
- The loss member is initialized to 0 and can be updated by specific loss function implementations in derived classes.
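The constructor behaviour described above (explicit construction from a node pointer, loss starting at 0, type set to "Output") can be sketched as follows. The `sketch::Node` and `sketch::OutputNode` types here are simplified, hypothetical stand-ins rather than the NeuZephyr classes, and `float` stands in for Tensor::value_type.

```cpp
#include <string>
#include <type_traits>
#include <vector>

namespace sketch {

// Hypothetical minimal base: a list of inputs and a type label.
struct Node {
    std::vector<Node*> inputs;
    std::string type;
    virtual ~Node() = default;
};

class OutputNode : public Node {
public:
    // `explicit` prevents a Node* from silently converting into an OutputNode.
    explicit OutputNode(Node* input) {
        inputs.push_back(input);
        type = "Output";
    }
    float getLoss() const { return loss; }

private:
    float loss = 0.0f;  // starts at 0 until a derived class updates it
};

// Compile-time check: there is no implicit Node* -> OutputNode conversion.
static_assert(!std::is_convertible<Node*, OutputNode>::value,
              "OutputNode must be constructed explicitly");

}  // namespace sketch
```

Marking a single-argument constructor `explicit` is a common way to avoid accidental conversions when a function expects the wrapper type but receives a raw pointer.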

void backward () [override, virtual]

Backward pass for the OutputNode.

The backward() method for the OutputNode sets the gradient of the output tensor to 1. More precisely, if the input node's output tensor requires gradients (for example, because it depends on trainable parameters), that gradient is filled with 1. This is the standard seed for the backward pass at the output layer, as it marks the start of gradient propagation through the network.

This method does not perform any gradient calculations for the output node itself. Instead, it ensures that the gradient of the input node’s output is set to 1, which is necessary for the backpropagation process in the neural network.

- The backward() method simply fills the gradient of the input tensor with 1. This is because the OutputNode represents the output layer, where the gradient is typically set to 1 as the starting point of backpropagation.
- The gradient of output is then available for further propagation through the network during the backward pass.

Implements nz::nodes::Node.
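The role of the seed gradient can be illustrated with a small, self-contained sketch in which an output node sits on top of a node computing y = 3x. All types in the `sketch` namespace are hypothetical host-side stand-ins, and the `requiresGrad` flag is an assumed name for the gradient requirement mentioned above.

```cpp
#include <algorithm>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

namespace sketch {

// Hypothetical tensor: values, gradients, and a gradient-requirement flag.
struct Tensor {
    std::vector<float> data, grad;
    bool requiresGrad = true;
    explicit Tensor(std::size_t n) : data(n, 0.0f), grad(n, 0.0f) {}
};

struct Node {
    std::vector<Node*> inputs;
    std::shared_ptr<Tensor> output;
    virtual ~Node() = default;
    virtual void forward() = 0;
    virtual void backward() = 0;
};

// Leaf node that only holds values.
struct LeafNode : Node {
    explicit LeafNode(std::vector<float> values) {
        output = std::make_shared<Tensor>(values.size());
        output->data = std::move(values);
    }
    void forward() override {}
    void backward() override {}
};

// y = factor * x, so dL/dx = factor * dL/dy.
struct ScaleNode : Node {
    float factor;
    ScaleNode(Node* input, float f) : factor(f) {
        inputs.push_back(input);
        output = std::make_shared<Tensor>(input->output->data.size());
    }
    void forward() override {
        const auto& x = inputs[0]->output->data;
        for (std::size_t i = 0; i < x.size(); ++i) {
            output->data[i] = factor * x[i];
        }
    }
    void backward() override {
        auto& gx = inputs[0]->output->grad;
        for (std::size_t i = 0; i < gx.size(); ++i) {
            gx[i] = factor * output->grad[i];
        }
    }
};

// Output node: relays its input and seeds the gradient with 1.
struct OutputNode : Node {
    explicit OutputNode(Node* input) { inputs.push_back(input); }
    void forward() override { output = inputs[0]->output; }
    void backward() override {
        Tensor& t = *inputs[0]->output;
        if (t.requiresGrad) {
            std::fill(t.grad.begin(), t.grad.end(), 1.0f);
        }
    }
};

}  // namespace sketch
```

Calling backward() on the output node first and on the scale node second leaves a gradient of 1 on y and, by the chain rule, a gradient of 3 on x.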

void forward () [override, virtual]

Forward pass for the OutputNode.

The forward() method for the OutputNode sets the output member of the node to be the same as the output of its input node. The OutputNode performs no computation of its own: it relays the input node's output unmodified during the forward pass.

This method is typically used in the context of a neural network, where the OutputNode represents the final layer, and it connects the output of the network to the loss function for loss computation and backpropagation.

- The forward() method does not alter the data from the input node; it merely sets the output of the OutputNode to be the same as the input node's output.
- This method is provided by the OutputNode class to conform to the interface defined by its base class Node.

Implements nz::nodes::Node.
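One way to realise such a pass-through is sketched below. It assumes the output is held through a shared pointer, so that relaying is a pointer assignment rather than a data copy; whether the library shares or copies the tensor is not stated here, and all names in the `sketch` namespace are hypothetical.

```cpp
#include <memory>
#include <vector>

namespace sketch {

// Hypothetical tensor holding only values.
struct Tensor {
    std::vector<float> data;
};

struct Node {
    std::vector<Node*> inputs;
    std::shared_ptr<Tensor> output;
    virtual ~Node() = default;
    virtual void forward() = 0;
};

// Source node that owns a tensor and computes nothing.
struct SourceNode : Node {
    SourceNode() { output = std::make_shared<Tensor>(); }
    void forward() override {}
};

struct OutputNode : Node {
    explicit OutputNode(Node* input) { inputs.push_back(input); }
    // Relay: point at the input's tensor instead of computing anything.
    void forward() override { output = inputs[0]->output; }
};

}  // namespace sketch
```

With this design, later changes to the input node's tensor are visible through the output node without another forward() call, which is a trade-off compared with copying the data.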

Tensor::value_type getLoss () const [nodiscard]

Retrieves the loss value stored in the OutputNode.

The getLoss() method returns the value of the loss that is stored in the loss member of the OutputNode. This value is typically updated by a derived class (e.g., a specific loss function class like Mean Squared Error or Cross-Entropy Loss) during the forward pass. The loss represents the discrepancy between the predicted output and the actual target output in the context of a neural network.

The getLoss() function provides access to the computed loss value, which is essential for monitoring the network’s performance during training and optimization.

- The method returns the loss member, which is of type Tensor::value_type.
- The loss value is expected to be set by derived classes such as MeanSquaredErrorNode or BinaryCrossEntropyNode.
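As an illustration of monitoring training through such an accessor, the sketch below records the loss after every forward pass of a one-parameter model. `SquaredErrorSketch` and `trainSketch` are hypothetical helpers written for this example, not NeuZephyr APIs, and `float` stands in for Tensor::value_type.

```cpp
#include <vector>

namespace sketch {

// Hypothetical loss holder mirroring the accessor described above.
class SquaredErrorSketch {
public:
    // Records (prediction - target)^2 as the current loss.
    void forward(float prediction, float target) {
        const float diff = prediction - target;
        loss = diff * diff;
    }
    [[nodiscard]] float getLoss() const { return loss; }

private:
    float loss = 0.0f;
};

// Fits w in prediction = w * x to a single (x, target) pair with gradient
// descent and returns the loss read through getLoss() after each forward pass.
inline std::vector<float> trainSketch(float x, float target, float lr, int steps) {
    SquaredErrorSketch lossNode;
    std::vector<float> history;
    float w = 0.0f;
    for (int step = 0; step < steps; ++step) {
        const float prediction = w * x;
        lossNode.forward(prediction, target);
        history.push_back(lossNode.getLoss());
        // d(loss)/dw = 2 * (prediction - target) * x
        w -= lr * 2.0f * (prediction - target) * x;
    }
    return history;
}

}  // namespace sketch
```

The `[[nodiscard]]` attribute asks the compiler to warn when the returned loss is ignored, which fits an accessor whose only purpose is to be read.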

void print (std::ostream &os) const [override, virtual]

Prints the type, data, gradient, and loss of the node.

The print() method outputs the information about the node, including its type, the tensor data stored in the node's output, the corresponding gradient, and the loss value (if available). This is useful for debugging and inspecting the state of nodes in a computational graph or during training, allowing for easy visualization of the node's content, gradients, and any associated loss.

The method outputs the following details:

- The type of the node.
- The data stored in the output tensor.
- The gradient of the output tensor.
- The loss value.

This method is primarily used for debugging and monitoring the state of tensors, gradients, and loss, making it easier to inspect how the data, gradients, and error values flow through the network.

- The output tensor should contain both the data and the gradient information, and both are printed when this method is called.
- The loss value will only be printed if it is associated with the node. If the node does not have a loss value, this field may be omitted.

Parameters

| Name | Description |
| --- | --- |
| os | The output stream (e.g., std::cout) to which the node's information will be printed. |
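A derived print() that extends the base output with a loss line might look like the sketch below. The text layout, the stream operator, and the `describe` helper are assumptions made for illustration, and the `sketch` types are hypothetical stand-ins; the actual format produced by the library is not specified here.

```cpp
#include <ostream>
#include <sstream>
#include <string>
#include <vector>

namespace sketch {

// Hypothetical base node that prints its type, data, and gradient.
struct Node {
    std::string type = "Node";
    std::vector<float> data, grad;
    virtual ~Node() = default;
    virtual void print(std::ostream& os) const {
        os << "type: " << type << "\ndata:";
        for (float v : data) os << ' ' << v;
        os << "\ngrad:";
        for (float g : grad) os << ' ' << g;
        os << '\n';
    }
};

// Output node: reuses the base output and appends the loss.
struct OutputNode : Node {
    float loss = 0.0f;
    OutputNode() { type = "Output"; }
    void print(std::ostream& os) const override {
        Node::print(os);
        os << "loss: " << loss << '\n';
    }
};

// Stream operator that dispatches to the virtual print().
inline std::ostream& operator<<(std::ostream& os, const Node& node) {
    node.print(os);
    return os;
}

// Convenience helper: render a node into a string.
inline std::string describe(const Node& node) {
    std::ostringstream oss;
    oss << node;
    return oss.str();
}

}  // namespace sketch
```

Because the stream operator takes a `const Node&` and calls the virtual print(), writing any node to std::cout or to a string stream picks up the derived formatting automatically.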

Reimplemented from nz::nodes::Node.

Definition at line 72 of file Nodes.cu.