|

NeuZephyr

Simple DL Framework

|

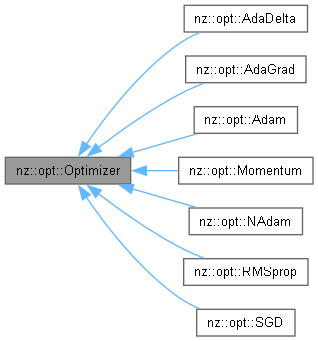

Base class for optimization algorithms in deep learning.

Public Member Functions

| Type | Member | Description |
| --- | --- | --- |
| | Optimizer ()=default | Default constructor for the Optimizer class. |
| virtual | ~Optimizer ()=default | Default destructor for the Optimizer class. |
| virtual void | step (Node *input)=0 | Pure virtual function for performing a single optimization step. |

Base class for optimization algorithms in deep learning.

The Optimizer class serves as the base class for all optimization algorithms used in training deep learning models. It defines the common interface that all optimizer classes must implement, including the step function, which updates the model parameters (or nodes) during training based on the optimizer's specific strategy.

The Optimizer class contains one protected member: learning_rate.

This class is intended to be subclassed by various optimization algorithms, such as SGD, Adam, and AdaGrad. Each subclass is required to implement the step function, which is responsible for updating the model parameters according to the specific optimization method being used.

Note:

- The step function should be called after the gradients for a given input have been computed.
- Each derived class provides its own implementation of the step function.
- learning_rate is typically set during the initialization of an optimizer and controls the size of the updates applied to model parameters.

This class is part of the nz::opt namespace and provides a common structure for implementing various optimizers, facilitating extensibility and code reuse.
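The subclassing contract described above can be sketched as follows. This is a minimal illustration, not the library's code: the `Node` struct is a hypothetical host-side stand-in (the real Node type is not shown in this section), the `float` type of `learning_rate` is an assumption, and `PlainGradientDescent` and `demo_single_step` are names invented for the example.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the library's Node: parameter values and their
// gradients stored on the host. The real Node type is not shown here.
struct Node {
    std::vector<float> value;
    std::vector<float> grad;
};

// Mirrors the documented interface: defaulted constructor, virtual defaulted
// destructor, and a pure virtual step().
class Optimizer {
public:
    explicit Optimizer() = default;
    virtual ~Optimizer() = default;
    virtual void step(Node* input) = 0;

protected:
    float learning_rate = 0.0f;  // assumed type; set by derived classes
};

// Illustrative subclass (not nz::opt::SGD): plain gradient descent.
class PlainGradientDescent : public Optimizer {
public:
    explicit PlainGradientDescent(float lr) { learning_rate = lr; }

    // Moves each parameter against its gradient, scaled by learning_rate.
    void step(Node* input) override {
        for (std::size_t i = 0; i < input->value.size(); ++i) {
            input->value[i] -= learning_rate * input->grad[i];
        }
    }
};

// Applies one update to a single parameter and returns the new value.
float demo_single_step(float value, float grad, float lr) {
    Node n{{value}, {grad}};
    PlainGradientDescent opt(lr);
    opt.step(&n);
    return n.value[0];
}
```

In this sketch the subclass constructor is the only place where `learning_rate` is assigned, which matches the note that derived classes set it during their own initialization.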

Definition at line 125 of file Optimizer.cuh.

| Optimizer () | explicit, default |

Default constructor for the Optimizer class.

This is the default constructor for the Optimizer class. Because it is defaulted, it performs no specific initialization of its own and leaves learning_rate at its default value; it is intended to be invoked from subclass constructors, where any additional initialization takes place.

Note: learning_rate is intended to be set by the derived classes during their initialization.

See also: Optimizer for the base class and other methods.

| ~Optimizer () | virtual, default |

Default destructor for the Optimizer class.

This is the default destructor for the Optimizer class. It ensures proper cleanup of any resources acquired by the class. Since this is a base class, the destructor is virtual to ensure that the destructors of derived classes are called correctly when an object is deleted through a base class pointer.

See also: Optimizer for the base class and other methods.
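The effect of the virtual destructor can be checked with a small sketch. Everything except the shape of the `Optimizer` interface is hypothetical here: `Node` is an empty placeholder, and `TrackingOptimizer` and `derived_destructor_runs` exist only to make the destructor call observable.

```cpp
#include <memory>

struct Node {};  // hypothetical placeholder; the real Node is not needed here

// Reduced copy of the documented interface.
class Optimizer {
public:
    virtual ~Optimizer() = default;
    virtual void step(Node* input) = 0;
};

// Hypothetical subclass that records when its destructor runs.
class TrackingOptimizer : public Optimizer {
public:
    explicit TrackingOptimizer(bool* destroyed) : destroyed_(destroyed) {}
    ~TrackingOptimizer() override { *destroyed_ = true; }
    void step(Node*) override {}  // no-op; only destruction matters here

private:
    bool* destroyed_;
};

// Returns true if deleting through an Optimizer pointer ran the derived
// destructor.
bool derived_destructor_runs() {
    bool destroyed = false;
    {
        // The owning pointer has the base type, so deletion goes through
        // Optimizer's destructor, which dispatches virtually.
        std::unique_ptr<Optimizer> opt(new TrackingOptimizer(&destroyed));
    }
    return destroyed;
}
```

If `~Optimizer` were not virtual, deleting a derived object through the base pointer would be undefined behavior in C++, so the flag could not be relied on.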

| virtual void step (Node *input) | pure virtual |

Pure virtual function for performing a single optimization step.

This is a pure virtual function that must be overridden by derived optimizer classes. The step function is responsible for updating the model parameters (or nodes) based on the optimization algorithm's rules. It takes a Node pointer as input, representing the parameters that will be modified in the optimization process.

The implementation of this function varies with the specific optimization algorithm (e.g., SGD, Adam, or Momentum), but the common goal is to update the model parameters in the direction that minimizes the loss function.

| Parameter | Description |
| --- | --- |
| input | A pointer to a Node object representing the model's parameters that will be updated during the optimization step. |

Note:

- Derived classes of Optimizer must implement this function for the optimization process to work.
- The Node class is expected to represent model parameters and should support the operations needed for optimization, such as gradient updates.

See also: SGD, Adam, Momentum, etc., for specific implementations of this method.

Implemented in nz::opt::AdaDelta, nz::opt::AdaGrad, nz::opt::Adam, nz::opt::Momentum, nz::opt::NAdam, nz::opt::RMSprop, and nz::opt::SGD.