
NeuZephyr

Simple DL Framework

AdaDelta optimizer for deep learning models.

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | AdaDelta (Tensor::value_type rho) | Constructs an AdaDelta optimizer with a specified decay rate. |
| void | step (Node *input) override | Performs a single optimization step using the AdaDelta algorithm. |

Public Member Functions inherited from nz::opt::Optimizer

| Return type | Member | Description |
| --- | --- | --- |
| | Optimizer ()=default | Default constructor for the Optimizer class. |
| virtual | ~Optimizer ()=default | Default destructor for the Optimizer class. |

AdaDelta optimizer for deep learning models.

The AdaDelta class implements the AdaDelta optimization algorithm, which is a variant of the Adagrad optimizer that seeks to reduce its aggressive, monotonically decreasing learning rate. Instead of accumulating all past squared gradients, AdaDelta restricts the window of accumulation to a fixed size, allowing for more robust updates and addressing the diminishing learning rate problem.
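For reference, the windowed accumulation described above is usually written as the following update rule. This is the textbook form of AdaDelta, not a transcription of Optimizer.cu: the stabilizing constant $\epsilon$ is an assumption (the source does not mention it), and the mapping of $E[g^2]$ and $E[\Delta\theta^2]$ onto the accumulators acc_grad and acc_delta is presumed from their names. Here $g_t$ is the gradient, $\theta_t$ the parameter, and $\rho$ the decay rate rho.

$$
\begin{aligned}
E[g^2]_t &= \rho\, E[g^2]_{t-1} + (1-\rho)\, g_t^2 \\
\Delta\theta_t &= -\frac{\sqrt{E[\Delta\theta^2]_{t-1} + \epsilon}}{\sqrt{E[g^2]_t + \epsilon}}\; g_t \\
E[\Delta\theta^2]_t &= \rho\, E[\Delta\theta^2]_{t-1} + (1-\rho)\, \Delta\theta_t^2 \\
\theta_{t+1} &= \theta_t + \Delta\theta_t
\end{aligned}
$$

Because both running averages decay geometrically, old gradients fade out instead of accumulating forever, which is what prevents the step size from shrinking monotonically as it does in Adagrad.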

This class extends the Optimizer base class and provides a concrete implementation of the step method, which updates the model's parameters (represented as Node objects) using the AdaDelta algorithm.

Note: Model parameters are expected to be Node objects, and each node must have associated gradients. The accumulators (acc_grad and acc_delta) are stored per Node object. If a Node does not have existing accumulators, they are initialized to zero tensors.

Definition at line 987 of file Optimizer.cuh.

AdaDelta (Tensor::value_type rho) [explicit]

Constructs an AdaDelta optimizer with a specified decay rate.

Initializes the AdaDelta optimization algorithm with a given decay rate (rho). AdaDelta is an adaptive learning rate method that automatically adjusts the learning rate for each parameter, addressing some limitations of traditional stochastic gradient descent methods.

Unlike other adaptive optimization algorithms, AdaDelta does not require an explicit learning rate. Instead, it uses a running average of squared gradients and squared parameter updates to scale the optimization step dynamically.

| rho | The decay rate that controls the moving window for accumulating gradient statistics. This parameter determines how quickly the algorithm forgets past gradient information. Typically set between 0.9 and 0.999. |
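To make the role of rho concrete, here is a minimal C++ sketch of the exponential moving average that a decay rate defines, together with the rough number of recent steps it remembers. The helper names `decayed_average` and `effective_window` are hypothetical and are not part of NeuZephyr; the sketch only illustrates the arithmetic.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper (not a NeuZephyr function): one update of an
// exponential moving average with decay rate rho.
inline double decayed_average(double acc, double value, double rho) {
    assert(rho >= 0.0 && rho < 1.0);
    return rho * acc + (1.0 - rho) * value;
}

// Hypothetical helper: approximate number of recent steps that dominate
// the average, using the common rule of thumb 1 / (1 - rho).
inline double effective_window(double rho) {
    assert(rho >= 0.0 && rho < 1.0);
    return 1.0 / (1.0 - rho);
}
```

Under this rule of thumb, rho = 0.9 corresponds to a window of roughly 10 steps and rho = 0.999 to roughly 1000 steps, so larger values forget past gradient information more slowly.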

Note: The rho parameter is analogous to the momentum decay rates in other adaptive optimization algorithms.

Definition at line 136 of file Optimizer.cu.

void step (Node *input) [override, virtual]

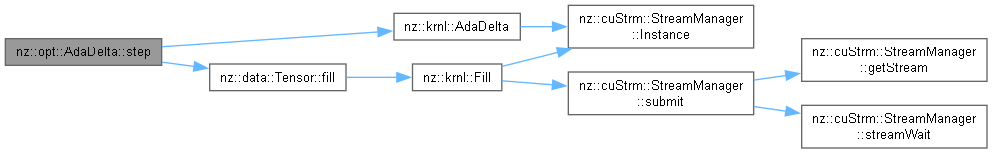

Performs a single optimization step using the AdaDelta algorithm.

This method updates the model parameters for a given input node using the AdaDelta optimization algorithm. It manages adaptive learning rates by maintaining running accumulators for both gradient and parameter update magnitudes.

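The method first checks whether the node already has accumulators and, if not, initializes them to zero tensors. It then updates the running averages and applies the resulting parameter update.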

The lazy initialization of accumulators ensures that each parameter has its own adaptive learning rate, allowing for more flexible and efficient optimization across different model parameters.
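The lazy, per-parameter accumulator pattern can be sketched on the CPU as follows. This is an illustration under stated assumptions, not the code in Optimizer.cu: `ParamSketch` and `AdaDeltaSketch` are hypothetical stand-ins for Node and AdaDelta, the epsilon default is assumed, and the update follows the textbook AdaDelta rule.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <unordered_map>
#include <vector>

// Hypothetical stand-in for a parameter node: values plus their gradients.
struct ParamSketch {
    std::vector<double> value;
    std::vector<double> grad;
};

// Illustrative CPU-only optimizer; class name and eps default are assumptions.
class AdaDeltaSketch {
public:
    explicit AdaDeltaSketch(double rho, double eps = 1e-6)
        : rho_(rho), eps_(eps) {}

    void step(ParamSketch* p) {
        assert(p != nullptr && p->value.size() == p->grad.size());
        const std::size_t n = p->value.size();

        // Lazy initialization: the first lookup creates empty accumulators
        // for this parameter, which are then sized and zero-filled.
        std::vector<double>& acc_grad = acc_grad_[p];
        std::vector<double>& acc_delta = acc_delta_[p];
        if (acc_grad.size() != n) acc_grad.assign(n, 0.0);
        if (acc_delta.size() != n) acc_delta.assign(n, 0.0);

        for (std::size_t i = 0; i < n; ++i) {
            const double g = p->grad[i];
            // Running average of squared gradients.
            acc_grad[i] = rho_ * acc_grad[i] + (1.0 - rho_) * g * g;
            // Step scaled by the ratio of the two running RMS values.
            const double delta =
                -std::sqrt(acc_delta[i] + eps_) / std::sqrt(acc_grad[i] + eps_) * g;
            // Running average of squared updates.
            acc_delta[i] = rho_ * acc_delta[i] + (1.0 - rho_) * delta * delta;
            p->value[i] += delta;
        }
    }

    // True once accumulators exist for the given parameter.
    bool has_state(const ParamSketch* p) const {
        return acc_grad_.count(p) != 0;
    }

private:
    double rho_;
    double eps_;
    std::unordered_map<const ParamSketch*, std::vector<double>> acc_grad_;
    std::unordered_map<const ParamSketch*, std::vector<double>> acc_delta_;
};
```

Keying the accumulators by parameter pointer means the optimizer needs no registration step: state for a parameter appears the first time `step` is called on it, and each element gets its own effective step size.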

| input | A pointer to the Node object representing the model parameter to be updated. The node must have a valid output tensor and its gradient already computed. |

Implements nz::opt::Optimizer.

Definition at line 140 of file Optimizer.cu.