NeuZephyr

Simple DL Framework

Adam optimizer for deep learning models.

Public Member Functions

| Specifier / type | Member | Description |
| --- | --- | --- |
| | Adam (Tensor::value_type learning_rate, Tensor::value_type beta1, Tensor::value_type beta2) | Constructs an Adam optimizer with the specified hyperparameters. |
| void | step (Node *input) override | Performs a single optimization step using the Adam algorithm. |

Public Member Functions inherited from nz::opt::Optimizer

| Specifier / type | Member | Description |
| --- | --- | --- |
| | Optimizer ()=default | Default constructor for the Optimizer class. |
| virtual | ~Optimizer ()=default | Default destructor for the Optimizer class. |

The Adam class implements the Adam optimization algorithm, which is an adaptive learning rate optimization method designed for training deep learning models. Adam combines the advantages of two popular optimization techniques: Adaptive Gradient Algorithm (AdaGrad) and Root Mean Square Propagation (RMSProp). It uses estimates of first and second moments of gradients to adaptively adjust the learning rate for each parameter.
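For reference, the moment estimates mentioned above are usually written as follows. This is the standard textbook formulation of Adam, not a transcription of the NeuZephyr kernel, whose exact update is not shown on this page. The symbols $g_t$ (gradient at step $t$), $\theta$ (parameter), and $\epsilon$ (small stabilizing constant) are notation assumed here; $\eta$, $\beta_1$, and $\beta_2$ correspond to the constructor arguments.

$$
\begin{aligned}
m_t &= \beta_1 m_{t-1} + (1 - \beta_1)\, g_t \\
v_t &= \beta_2 v_{t-1} + (1 - \beta_2)\, g_t^2 \\
\hat{m}_t &= \frac{m_t}{1 - \beta_1^t}, \qquad \hat{v}_t = \frac{v_t}{1 - \beta_2^t} \\
\theta_t &= \theta_{t-1} - \eta\, \frac{\hat{m}_t}{\sqrt{\hat{v}_t} + \epsilon}
\end{aligned}
$$

Here $m_0 = v_0 = 0$, and the square, square root, and division are applied element-wise.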

This class extends the Optimizer base class and provides a concrete implementation of the step method, which updates the model's parameters (represented as Node objects) using the Adam algorithm.

- Model parameters are represented as Node objects, and each node must have associated gradients.
- The first and second moment estimates (m and v) are stored per Node object. If a Node does not have existing moments, they are initialized to zero tensors.

Definition at line 707 of file Optimizer.cuh.

Adam (Tensor::value_type learning_rate, Tensor::value_type beta1, Tensor::value_type beta2) [explicit]

Constructs an Adam optimizer with the specified hyperparameters.

The Adam constructor initializes an instance of the Adam optimizer with the given learning rate, beta1, and beta2 values. These hyperparameters control the behavior of the Adam optimization algorithm and are described in the parameter list below.

The constructor also initializes the internal iteration counter (it) to zero, which is used for bias correction during the parameter updates.

| Parameter | Description |
| --- | --- |
| learning_rate | The learning rate ($\eta$) used for parameter updates. It controls the step size. |
| beta1 | The exponential decay rate for the first moment estimate ($\beta_1$). Typical values are in the range [0.9, 0.99]. |
| beta2 | The exponential decay rate for the second moment estimate ($\beta_2$). Typical values are in the range [0.99, 0.999]. |
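The role of the iteration counter in bias correction can be illustrated with a small sketch. It assumes the standard Adam formulation, in which each moment estimate is divided by $1 - \beta^t$; the helpers `bias_correction` and `first_moment` are hypothetical names introduced for this example and are not part of NeuZephyr.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper, not part of NeuZephyr: returns the bias-correction
// denominator 1 - beta^t used by the standard Adam formulation.
inline double bias_correction(double beta, int t) {
    // The counter starts at zero, so it must be incremented before first use;
    // otherwise the denominator would be 1 - beta^0 = 0.
    assert(t >= 1);
    return 1.0 - std::pow(beta, t);
}

// First-moment estimate after t steps of a constant gradient g,
// starting from the zero-initialized moment m = 0.
inline double first_moment(double beta1, double g, int t) {
    double m = 0.0;
    for (int i = 0; i < t; ++i) {
        m = beta1 * m + (1.0 - beta1) * g;
    }
    return m;
}
```

With a constant gradient, the raw estimate `first_moment(beta1, g, t)` starts far below `g` because the moment begins at zero, while dividing it by `bias_correction(beta1, t)` recovers `g` at every step. This is why the counter has to advance before each update.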

Definition at line 79 of file Optimizer.cu.

void step (Node *input) [override, virtual]

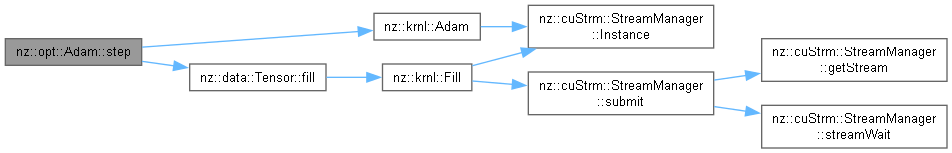

Performs a single optimization step using the Adam algorithm.

The step method updates the model parameters based on the gradients computed during the backward pass. It applies the Adam optimization algorithm, which uses moving averages of the gradients and their squared values to adaptively adjust the learning rate for each parameter. This helps achieve stable and efficient parameter updates.

The method performs the following steps:

1. Increments the iteration counter (it), which is used for bias correction.
2. Checks whether the first moment estimate (m) and second moment estimate (v) for the given input node exist. If not, it initializes them to zero tensors with the same shape as the node's output.
3. Updates the parameter using the gradients, their moving average (m), and the moving average of their squared values (v), along with the specified hyperparameters (learning rate, beta1, beta2, epsilon).

This method is designed to be used with a model parameter represented as a Node object and assumes that the node has an associated output tensor and gradient.
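The steps listed above can be sketched on the CPU as follows. This is a minimal illustration under stated assumptions, not the NeuZephyr implementation: `ToyParam` and `ToyAdam` are hypothetical stand-ins for Node and Adam, plain `std::vector<float>` buffers replace tensors, the update follows the standard Adam formulation, and the default `epsilon` of `1e-8f` is an assumed value.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <unordered_map>
#include <vector>

// Hypothetical stand-in for a parameter node: a value buffer plus its gradient.
struct ToyParam {
    std::vector<float> value;
    std::vector<float> grad;
};

// Illustrative Adam optimizer; not the NeuZephyr implementation.
class ToyAdam {
public:
    ToyAdam(float learning_rate, float beta1, float beta2, float epsilon = 1e-8f)
        : lr_(learning_rate), beta1_(beta1), beta2_(beta2), eps_(epsilon) {}

    void step(ToyParam* p) {
        assert(p != nullptr && p->value.size() == p->grad.size());

        // 1. Advance the iteration counter used for bias correction.
        ++it_;

        // 2. Look up the moments for this parameter, creating zero-filled
        //    buffers of matching size the first time the parameter is seen.
        Moments& s = state_[p];
        if (s.m.size() != p->value.size()) {
            s.m.assign(p->value.size(), 0.0f);
            s.v.assign(p->value.size(), 0.0f);
        }

        // 3. Update the moments and apply the bias-corrected step element-wise.
        const float c1 = 1.0f - std::pow(beta1_, static_cast<float>(it_));
        const float c2 = 1.0f - std::pow(beta2_, static_cast<float>(it_));
        for (std::size_t i = 0; i < p->value.size(); ++i) {
            const float g = p->grad[i];
            s.m[i] = beta1_ * s.m[i] + (1.0f - beta1_) * g;
            s.v[i] = beta2_ * s.v[i] + (1.0f - beta2_) * g * g;
            const float m_hat = s.m[i] / c1;
            const float v_hat = s.v[i] / c2;
            // epsilon keeps the denominator non-zero when v_hat is zero.
            p->value[i] -= lr_ * m_hat / (std::sqrt(v_hat) + eps_);
        }
    }

private:
    struct Moments {
        std::vector<float> m;  // first moment estimate
        std::vector<float> v;  // second moment estimate
    };

    float lr_, beta1_, beta2_, eps_;
    int it_ = 0;  // iteration counter, starts at zero
    std::unordered_map<const ToyParam*, Moments> state_;  // per-parameter moments
};
```

In this sketch, the first call to `step` moves each element by roughly the learning rate in the direction opposite to its gradient, regardless of the gradient's magnitude, and an element with zero gradient is left unchanged because `epsilon` prevents a division by zero.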

| input | A pointer to the Node object representing the model parameter to be updated. The node should have an output tensor and its gradient already computed. |

- The input node must have a valid gradient stored in its output object.
- The first moment estimate (m) and second moment estimate (v) are maintained for each node individually.
- The epsilon value is used to prevent division by zero during the parameter update.

Implements nz::opt::Optimizer.

Definition at line 86 of file Optimizer.cu.