
NeuZephyr

Simple DL Framework


AdaGrad optimizer for deep learning models.

Public Member Functions

| Type | Member | Description |
|---|---|---|
|  | AdaGrad (Tensor::value_type learning_rate) | Constructs an AdaGrad optimizer with the specified learning rate. |
| void | step (Node *input) override | Performs a single optimization step using the AdaGrad algorithm. |

Public Member Functions inherited from nz::opt::Optimizer

| Type | Member | Description |
|---|---|---|
|  | Optimizer ()=default | Default constructor for the Optimizer class. |
| virtual | ~Optimizer ()=default | Default destructor for the Optimizer class. |

AdaGrad optimizer for deep learning models.

The AdaGrad class implements the Adaptive Gradient algorithm, an optimization method that adapts the learning rate of each parameter based on that parameter's historical gradients: the more squared gradient a parameter has accumulated, the smaller its effective step size becomes. This makes AdaGrad well suited to sparse gradients, because rarely updated parameters keep comparatively large learning rates throughout training.
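For reference, the textbook AdaGrad update can be written as follows, where $g_t$ is the gradient at step $t$, $G_t$ is the running sum of squared gradients (the quantity kept in the gss map), $\eta$ is the learning rate, $\epsilon$ is the stability constant, and the product, square root, and division all act element-wise. This is the standard formulation; whether NeuZephyr adds $\epsilon$ outside or inside the square root is an assumption here, not something this page states.

$$
G_t = G_{t-1} + g_t \odot g_t, \qquad G_0 = 0
$$

$$
\theta_t = \theta_{t-1} - \frac{\eta}{\sqrt{G_t} + \epsilon} \odot g_t
$$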

This class extends the Optimizer base class and provides a concrete implementation of the step method, which updates the model's parameters using the AdaGrad algorithm.

Note:

- The model parameters are represented as Node objects, and these nodes must have associated gradients for the optimizer to function correctly.
- The gss map stores the sum of squared gradients for each parameter, which is used to adjust that parameter's learning rate.
- The epsilon term ensures numerical stability when dividing by the accumulated squared-gradient term.

Definition at line 458 of file Optimizer.cuh.
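To make the roles of the gss map and epsilon concrete, here is a minimal, self-contained C++ sketch of the same class shape. It is not NeuZephyr's implementation: Param is a hypothetical stand-in for Node (a flat float buffer plus its gradient), OptimizerSketch and AdaGradSketch are illustrative names, the sketch runs on the CPU for clarity, and the update assumes the textbook rule with epsilon added outside the square root. Each parameter's accumulator is created lazily, keyed by the parameter's address, on the first call to step.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <unordered_map>
#include <vector>

// Hypothetical stand-in for Node: parameter values plus their gradients.
struct Param {
    std::vector<float> value;
    std::vector<float> grad;
};

// Abstract base with the same shape as the documented Optimizer (assumed).
class OptimizerSketch {
public:
    OptimizerSketch() = default;
    virtual ~OptimizerSketch() = default;
    virtual void step(Param* input) = 0;
};

class AdaGradSketch : public OptimizerSketch {
public:
    explicit AdaGradSketch(float learning_rate) : lr_(learning_rate) {}

    void step(Param* input) override {
        assert(input != nullptr);
        assert(input->value.size() == input->grad.size());
        // Lazily create a zero-filled accumulator the first time this parameter is seen.
        auto it = gss_.find(input);
        if (it == gss_.end()) {
            it = gss_.emplace(input, std::vector<float>(input->value.size(), 0.0f)).first;
        }
        std::vector<float>& G = it->second;
        for (std::size_t i = 0; i < G.size(); ++i) {
            const float g = input->grad[i];
            G[i] += g * g;  // accumulate the squared gradient
            // Per-element adaptive step: lr / (sqrt(G) + epsilon).
            input->value[i] -= lr_ * g / (std::sqrt(G[i]) + epsilon_);
        }
    }

    // Read-only access to a parameter's accumulator (throws if never stepped).
    const std::vector<float>& accumulator(Param* p) const { return gss_.at(p); }

private:
    float lr_;
    float epsilon_ = 1e-6f;  // matches the documented default of 1e-6
    std::unordered_map<Param*, std::vector<float>> gss_;
};
```

Calling step repeatedly on the same Param reuses its accumulator, so each element's effective step size shrinks as squared gradients pile up. Keying the map by pointer mirrors a per-parameter store, but it assumes parameter objects stay alive (and at the same address) for as long as the optimizer uses them.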

AdaGrad (Tensor::value_type learning_rate) [explicit]

Constructs an AdaGrad optimizer with the specified learning rate.

This constructor initializes the AdaGrad optimizer with the given learning rate, which is used to control the magnitude of the updates during training. The learning rate determines how much to adjust the model's parameters in response to the computed gradients.

Parameters

| learning_rate | The learning rate to be used for parameter updates. It is a scalar value that controls the size of the steps taken during the optimization process. A smaller value makes the updates more conservative, while a larger value can speed up convergence but may cause instability. |

Note:

- The epsilon value used in the AdaGrad algorithm defaults to 1e-6 for numerical stability during updates and is not modified by this constructor.
- Model parameters are expected to be Node objects; the step method uses the gradients of these nodes to update them.

Definition at line 46 of file Optimizer.cu.
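A quick way to see what learning_rate controls: on the very first step the accumulator equals $g^2$, so the update is $\eta \, g / (|g| + \epsilon)$, which is approximately $\eta \cdot \mathrm{sign}(g)$ whatever the gradient's scale. The sketch below assumes the textbook rule with $\epsilon = 10^{-6}$ added outside the square root; firstStepDelta is a hypothetical helper, not part of NeuZephyr.

```cpp
#include <cassert>
#include <cmath>

// Size of the first AdaGrad update for a scalar parameter, assuming the
// accumulator starts at zero and the rule theta -= lr * g / (sqrt(G) + eps).
double firstStepDelta(double learning_rate, double grad, double eps = 1e-6) {
    assert(learning_rate > 0.0);
    const double G = grad * grad;  // accumulator after the first step
    return learning_rate * grad / (std::sqrt(G) + eps);
}
```

For example, firstStepDelta(0.01, 250.0) and firstStepDelta(0.01, 0.004) both come out close to 0.01, so the first move of every parameter is roughly learning_rate in size; later steps shrink as the accumulator grows.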

void step (Node *input) [override], [virtual]

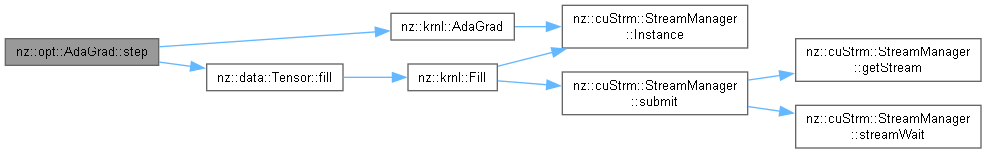

Performs a single optimization step using the AdaGrad algorithm.

The step function updates the model parameters held by the given Node object using the AdaGrad optimization algorithm. AdaGrad adapts each parameter's learning rate according to its gradient history, so parameters that receive infrequent (sparse) gradients retain larger effective step sizes and can converge faster.
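The effect on sparse gradients can be illustrated by replaying two gradient histories through the accumulator and comparing the resulting effective step size $\eta / (\sqrt{G} + \epsilon)$. effectiveLearningRate is a hypothetical helper that assumes the textbook update; it is not NeuZephyr code.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Effective per-parameter step size lr / (sqrt(G) + eps) after replaying a
// gradient history through the AdaGrad accumulator G = sum of g^2.
double effectiveLearningRate(double lr, const std::vector<double>& grads, double eps = 1e-6) {
    assert(lr > 0.0);
    double G = 0.0;
    for (double g : grads) {
        G += g * g;  // same accumulation the gss map performs per element
    }
    return lr / (std::sqrt(G) + eps);
}
```

With lr = 0.1, a parameter that received a gradient of 1 on each of four steps ends at about 0.05, while one that received a single gradient of 1 (zeros otherwise) ends at about 0.1: the rarely updated parameter keeps twice the step size.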

This method performs the following steps:

- Initializes the sum-of-squared-gradients entry in gss for the parameter (Node) if it has not been initialized.

Parameters

| input | A pointer to the Node object representing the model parameters. This object should have gradients stored in its output attribute, which will be used to update the parameters. |

Note:

- The Node object is assumed to have a valid output tensor whose gradients have already been computed.
- The gss map stores the sum of squared gradients for each parameter, so each parameter's effective learning rate shrinks as its accumulated squared gradient grows.
- The epsilon term avoids division by zero and ensures numerical stability when updating the parameters.

Implements nz::opt::Optimizer.

Definition at line 50 of file Optimizer.cu.
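The epsilon guard matters in a concrete case: an element whose gradient has been exactly zero so far has $G = 0$, so the unguarded ratio $g / \sqrt{G}$ would be $0/0$. The sketch below assumes epsilon is added outside the square root (the placement is an assumption) and shows that the guarded denominator is always at least $\epsilon$; adagradDelta is a hypothetical helper.

```cpp
#include <cassert>
#include <cmath>

// One-element AdaGrad update term, assuming the rule lr * g / (sqrt(G) + eps).
// Because sqrt(G) >= 0 and eps > 0, the denominator is never zero.
double adagradDelta(double lr, double g, double G, double eps = 1e-6) {
    assert(eps > 0.0);
    assert(G >= 0.0);  // G is a sum of squares
    return lr * g / (std::sqrt(G) + eps);
}
```

adagradDelta(0.1, 0.0, 0.0) returns exactly 0.0 rather than NaN, so a parameter element that has never received a nonzero gradient is simply left unchanged.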