NeuZephyr

Simple DL Framework

Stochastic Gradient Descent (SGD) optimizer for deep learning models.

Public Member Functions

| Specifiers / return type | Member | Description |
| --- | --- | --- |
| | SGD (Tensor::value_type learning_rate) | Constructor for the SGD optimizer. |
| void | step (Node *input) override | Performs a single step of the Stochastic Gradient Descent (SGD) optimization. |

Public Member Functions inherited from nz::opt::Optimizer

| Specifiers / return type | Member | Description |
| --- | --- | --- |
| | Optimizer ()=default | Default constructor for the Optimizer class. |
| virtual | ~Optimizer ()=default | Default destructor for the Optimizer class. |

Stochastic Gradient Descent (SGD) optimizer for deep learning models.

The SGD class implements the Stochastic Gradient Descent optimization algorithm, which is one of the most basic and widely-used methods for optimizing deep learning model parameters. The algorithm updates the model's parameters by moving in the direction of the negative gradient scaled by a learning rate.

This class extends the Optimizer base class and provides a concrete implementation of the step method, which updates the parameters of the model (represented as Node objects) using the SGD algorithm.
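The update described above is commonly written as the rule below. The symbols are notation introduced here for illustration and do not appear in the library: $\theta_t$ stands for the parameters at iteration $t$, $\eta$ for the learning rate, and $L$ for the loss being minimized.

$$
\theta_{t+1} = \theta_t - \eta \, \nabla_{\theta} L(\theta_t)
$$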

Note: Model parameters must be represented as Node objects, and these nodes must have associated gradients for the optimizer to function correctly.

Definition at line 250 of file Optimizer.cuh.

SGD (Tensor::value_type learning_rate) [explicit]

Constructor for the SGD optimizer.

This constructor initializes the SGD optimizer with the specified learning rate. The learning rate is a hyperparameter that sets the step size of each parameter update during training. A smaller learning rate produces smaller, more cautious updates; a larger learning rate can speed up convergence but risks overshooting the optimal solution.

| learning_rate | The learning rate to be used in the optimization process. It defines the magnitude of the updates to the model parameters. |

Definition at line 10 of file Optimizer.cu.
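The effect of the learning rate can be seen on a toy problem. The sketch below is not NeuZephyr code: `run_sgd` is a hypothetical helper that minimizes f(x) = x² (gradient 2x) with plain gradient descent, chosen only because its behavior is easy to verify by hand.

```cpp
#include <cassert>

// Hypothetical helper (not part of NeuZephyr): runs `steps` iterations of
// gradient descent on f(x) = x^2, whose gradient is 2x, and returns the
// final value of x.
double run_sgd(double x0, double learning_rate, int steps) {
    assert(steps >= 0);
    double x = x0;
    for (int i = 0; i < steps; ++i) {
        const double grad = 2.0 * x;   // df/dx at the current point
        x -= learning_rate * grad;     // move against the gradient
    }
    return x;
}
```

Each step multiplies x by (1 - 2 * learning_rate). With a learning rate of 0.1 that factor is 0.8, so x shrinks toward the minimum at 0. With a learning rate of 1.1 the factor is -1.2, so x flips sign and grows in magnitude at every step, which is the overshooting described above.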

void step (Node *input) [override, virtual]

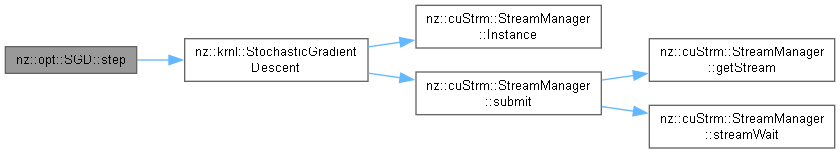

Performs a single step of the Stochastic Gradient Descent (SGD) optimization.

This method updates the model parameters (represented by Node objects) using the Stochastic Gradient Descent algorithm. The parameters are updated based on the gradients computed during the backward pass, and the updates are scaled by the learning rate. The method uses CUDA to parallelize the parameter updates on the GPU, which helps performance for large models.

Each parameter is adjusted by adding its negative gradient scaled by the learning rate. Call this method once per training iteration, after the backward pass has computed the gradients.

| input | The Node object that holds the model parameters and their gradients. This node must have a valid gradient computed during the backward pass. |

Note: The input node must contain a valid output tensor with computed gradients.

Implements nz::opt::Optimizer.

Definition at line 14 of file Optimizer.cu.
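The element-wise update that step is described as performing can be sketched on the CPU as follows. This is an illustration under stated assumptions, not the library's implementation: `ParamBuffer` and `sgd_step` are hypothetical names standing in for a Node's values and gradients, and the documented method performs the update on the GPU with CUDA rather than in a serial loop.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for a parameter node (not the NeuZephyr Node class):
// a flat buffer of values and a gradient buffer of the same length.
struct ParamBuffer {
    std::vector<float> data;  // current parameter values
    std::vector<float> grad;  // gradients from the backward pass
};

// Applies one SGD update in place: data[i] -= learning_rate * grad[i].
void sgd_step(ParamBuffer& p, float learning_rate) {
    assert(p.data.size() == p.grad.size());  // every value needs a gradient
    for (std::size_t i = 0; i < p.data.size(); ++i) {
        p.data[i] -= learning_rate * p.grad[i];
    }
}
```

Because each element is updated independently of the others, the loop body maps naturally onto one GPU thread per element, which is presumably why the update parallelizes well.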