NeuZephyr

Simple DL Framework

RMSprop optimizer for deep learning models.

Public Member Functions

| Qualifier / return type | Member | Description |
|---|---|---|
| | `RMSprop(Tensor::value_type learning_rate, Tensor::value_type decay_rate)` | Constructs an RMSprop optimizer with the specified learning rate and decay rate. |
| `void` | `step(Node *input) override` | Performs a single optimization step using the RMSprop algorithm. |

Public Member Functions inherited from nz::opt::Optimizer

| Qualifier / return type | Member | Description |
|---|---|---|
| | `Optimizer() = default` | Default constructor for the Optimizer class. |
| `virtual` | `~Optimizer() = default` | Default destructor for the Optimizer class. |

RMSprop optimizer for deep learning models.

The RMSprop class implements the RMSprop (Root Mean Square Propagation) optimization algorithm. RMSprop addresses AdaGrad's diminishing learning rate by replacing AdaGrad's ever-growing sum of squared gradients with an exponentially decaying moving average. Because old gradients are gradually forgotten, the effective learning rate does not shrink toward zero, which makes RMSprop well suited to non-stationary or dynamically changing loss functions.
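To make the contrast with AdaGrad concrete, the sketch below (plain C++, not NeuZephyr code) feeds a constant gradient to both kinds of accumulator and reports the effective learning rate η/√(accumulator + ε). The function `effectiveRatesAfter` and the struct `EffectiveRates` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <cmath>

// Effective learning rates after t steps of a constant gradient g.
struct EffectiveRates {
    double adagrad;  // eta / sqrt(sum of g^2 + eps)
    double rmsprop;  // eta / sqrt(moving average of g^2 + eps)
};

// Illustrative comparison only; both accumulators start at zero.
EffectiveRates effectiveRatesAfter(int t, double g, double eta, double beta, double eps) {
    assert(t >= 1 && beta >= 0.0 && beta < 1.0);
    double sum = 0.0;  // AdaGrad: grows by g^2 on every step, without bound
    double v = 0.0;    // RMSprop: exponential moving average, levels off at g^2
    for (int i = 0; i < t; ++i) {
        sum += g * g;
        v = beta * v + (1.0 - beta) * g * g;
    }
    return {eta / std::sqrt(sum + eps), eta / std::sqrt(v + eps)};
}
```

With g = 1, η = 0.01, β = 0.9 and 10,000 steps, the AdaGrad rate has fallen to about 1e-4, while the RMSprop rate stays close to 0.01.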

This class extends the Optimizer base class and provides a concrete implementation of the step method, which updates the model's parameters (represented as Node objects) using the RMSprop algorithm.

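For each parameter, the update is:

$$
v_t = \beta v_{t-1} + (1 - \beta) g_t^2
$$

$$
\theta_t = \theta_{t-1} - \frac{\eta}{\sqrt{v_t + \epsilon}} g_t
$$

where $g_t$ is the gradient at step $t$, $\eta$ is the learning rate, $\beta$ is the decay rate, and $\epsilon$ is a small constant.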
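A CPU sketch of the two equations, applied element-wise to a flat parameter vector, may help when reading the update. It assumes that parameters, gradients, and the cache are stored as `std::vector<float>`. The function `rmspropUpdate` is hypothetical and does not reflect how NeuZephyr lays out tensors or launches its CUDA kernels. ε defaults to 1e-6, the value given in the constructor notes.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One RMSprop step following the equations above, element by element.
// theta: parameters (updated in place), g: gradients,
// v: moving average of squared gradients (updated in place),
// eta: learning rate, beta: decay rate, eps: numerical-stability constant.
void rmspropUpdate(std::vector<float>& theta, const std::vector<float>& g,
                   std::vector<float>& v, float eta, float beta, float eps = 1e-6f) {
    assert(theta.size() == g.size() && g.size() == v.size());
    for (std::size_t i = 0; i < theta.size(); ++i) {
        v[i] = beta * v[i] + (1.0f - beta) * g[i] * g[i];  // v_t
        theta[i] -= eta / std::sqrt(v[i] + eps) * g[i];    // theta_t
    }
}
```

Worked by hand with θ = 1, g = 2, v = 0, η = 0.1 and β = 0.9: the first call sets v = 0.1 · 4 = 0.4 and moves θ to 1 − 0.1 · 2 / √0.400001 ≈ 0.6838.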

Note:

- The model parameters are assumed to be Node objects whose gradients have been computed before the step method is called.
- The v map stores the moving average of squared gradients for each parameter.
- The epsilon term avoids division by zero and ensures numerical stability.

Definition at line 577 of file Optimizer.cuh.
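The role of ε is easiest to see on a freshly initialized cache. If both v and the gradient are zero, the denominator √(v + ε) would be zero without ε. The small sketch below uses hypothetical helper names and double precision for clarity. It asserts that the denominator stays strictly positive, so the update is always finite.

```cpp
#include <cassert>
#include <cmath>

// Denominator of the RMSprop update, sqrt(v + eps).
double rmspropDenominator(double v, double eps) {
    return std::sqrt(v + eps);
}

// Parameter change eta * g / sqrt(v + eps). The assertion documents that a
// positive eps keeps the denominator strictly positive even when v == 0.
double rmspropDelta(double g, double v, double eta, double eps) {
    const double denom = rmspropDenominator(v, eps);
    assert(denom > 0.0);
    return eta / denom * g;
}
```

With ε > 0, the magnitude of the change is bounded by η·|g|/√ε, so a near-empty cache cannot produce an unbounded update.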

`explicit RMSprop(Tensor::value_type learning_rate, Tensor::value_type decay_rate)`

Constructs an RMSprop optimizer with specified learning rate and decay rate.

The constructor initializes the RMSprop optimizer with the provided learning rate and decay rate. RMSprop is an adaptive learning rate optimization algorithm that maintains a moving average of squared gradients to scale the learning rate for each parameter individually.

This constructor sets the initial values for:

- learning_rate: the step size used for parameter updates.
- decay_rate: the factor used to update the moving average of squared gradients, which controls how much previous gradients influence the current update.

Parameters

| Parameter | Description |
|---|---|
| learning_rate | The learning rate used in the RMSprop algorithm to scale the gradient updates. |
| decay_rate | The decay rate (β in the update equations) used to compute the moving average of squared gradients. A higher value gives more weight to previous gradients, while a lower value emphasizes recent gradients. |
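Unrolling the moving-average recurrence with v₀ = 0 shows what a higher or lower decay_rate means in practice: the squared gradient from k steps ago enters v_t with weight (1 − β)·β^k. The hypothetical helper below lists those weights. It is an illustration, not part of NeuZephyr.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Weight that v_t assigns to the squared gradient from k steps ago, obtained by
// unrolling v_t = beta * v_{t-1} + (1 - beta) * g_t^2 with v_0 = 0.
// Index k = 0 is the current step.
std::vector<double> squaredGradientWeights(double beta, int t) {
    assert(beta >= 0.0 && beta < 1.0 && t >= 1);
    std::vector<double> w(t);
    for (int k = 0; k < t; ++k) {
        w[k] = (1.0 - beta) * std::pow(beta, k);
    }
    return w;
}
```

With β = 0.9, the current step gets weight 0.1, and the weights fall off by a factor of 0.9 per step (0.1, 0.09, 0.081, ...). With β = 0.99, the current step gets only 0.01, so older gradients dominate the average.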

Note:

- A small epsilon value (1e-6) is used to avoid division by zero during parameter updates.
- After construction, call the step method to perform optimization steps.

Definition at line 62 of file Optimizer.cu.

|

overridevirtual |

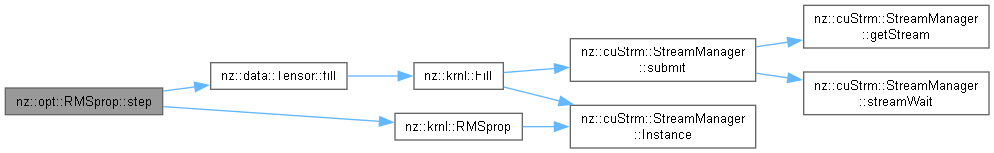

Performs a single optimization step using the RMSprop algorithm.

The step method updates one model parameter using the gradient computed during the backward pass. It applies the RMSprop algorithm, which divides each gradient element by the square root of a moving average of its squared values, so that every parameter element gets its own adaptive learning rate. This keeps the effective step size stable and prevents updates from becoming too large or too small during training.
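The claim that RMSprop keeps step sizes from becoming too large or too small can be checked with a constant gradient. Once the moving average has warmed up, v approaches g², so the step η·|g|/√(v + ε) approaches η whether g is 100 or 0.01. The helper name below is hypothetical, and the loop runs on the CPU in double precision.

```cpp
#include <cassert>
#include <cmath>

// Magnitude of the RMSprop step after `steps` iterations with a constant
// gradient g, starting from v = 0. For large `steps`, v approaches g * g, so
// the step approaches eta as long as g * g is large compared with eps.
double stepAfterConstantGradient(double g, int steps, double eta, double beta,
                                 double eps = 1e-6) {
    assert(steps >= 1 && beta >= 0.0 && beta < 1.0);
    double v = 0.0;
    for (int i = 0; i < steps; ++i) {
        v = beta * v + (1.0 - beta) * g * g;
    }
    return eta / std::sqrt(v + eps) * std::abs(g);
}
```

Plain gradient descent with η = 0.01 would move 1.0 for g = 100 and 0.0001 for g = 0.01. Here, both steps come out close to 0.01. The tiny-gradient step is slightly smaller (about 0.00995) because g² = 1e-4 is only 100 times larger than ε.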

The method checks whether a squared-gradient cache (v) exists for the given input node; if not, it creates one initialized to zero. It then applies the RMSprop update rule using the current gradient, the moving average of squared gradients, and the configured learning rate and decay rate.
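The control flow described above can be sketched in plain C++: look up the node's cache, create it zero-filled on first use, then apply the update. `ToyNode`, `ToyOptimizer`, and `ToyRMSprop` are hypothetical stand-ins. They mirror the documented shape (a virtual `step(Node *)` overridden by RMSprop, plus a per-node `v` cache) but not NeuZephyr's actual Tensor storage or CUDA kernels.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <unordered_map>
#include <vector>

// Hypothetical parameter node: values and their gradients.
struct ToyNode {
    std::vector<float> value;
    std::vector<float> grad;
};

// Hypothetical base class with a virtual step, as in the documented Optimizer.
class ToyOptimizer {
public:
    virtual ~ToyOptimizer() = default;
    virtual void step(ToyNode* input) = 0;
};

class ToyRMSprop : public ToyOptimizer {
public:
    ToyRMSprop(float learning_rate, float decay_rate)
        : learning_rate_(learning_rate), decay_rate_(decay_rate) {}

    void step(ToyNode* input) override {
        assert(input != nullptr && input->value.size() == input->grad.size());
        // Lazily create a zero-filled squared-gradient cache for this node.
        auto it = v_.find(input);
        if (it == v_.end()) {
            it = v_.emplace(input, std::vector<float>(input->grad.size(), 0.0f)).first;
        }
        std::vector<float>& v = it->second;
        for (std::size_t i = 0; i < v.size(); ++i) {
            const float g = input->grad[i];
            v[i] = decay_rate_ * v[i] + (1.0f - decay_rate_) * g * g;
            input->value[i] -= learning_rate_ / std::sqrt(v[i] + epsilon_) * g;
        }
    }

    // Number of nodes that currently have a cache (for illustration).
    std::size_t cachedNodes() const { return v_.size(); }

private:
    float learning_rate_;
    float decay_rate_;
    float epsilon_ = 1e-6f;                               // small stability constant
    std::unordered_map<ToyNode*, std::vector<float>> v_;  // per-node cache
};
```

Keying the cache by node pointer lets a single optimizer instance serve every parameter. Each node gets its own v the first time step sees it, and later calls reuse that cache.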

This method is designed to be used with a model parameter represented as a Node object and assumes that the node has an associated output and gradient.

Parameters

| Parameter | Description |
|---|---|
| input | A pointer to the Node object representing the model parameter to be updated. The node should have an output tensor and its gradient already computed. |

Note:

- The input node must have a valid gradient stored in its output object.
- The squared-gradient cache (v) is maintained for each node individually.

Implements nz::opt::Optimizer.

Definition at line 67 of file Optimizer.cu.
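The call sequence below is sketched with a scalar toy problem. On every iteration, the gradient is computed first and only then is step applied, which matches the requirement that the node already holds a valid gradient. The example minimizes the hypothetical objective f(x) = (x − 3)² with a self-contained scalar RMSprop. In NeuZephyr, the gradient would instead come from the model's backward computation on a Node.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical scalar parameter: a value and the gradient computed for it.
struct ScalarParam {
    double value = 0.0;
    double grad = 0.0;
};

// Minimal scalar RMSprop using the update rule from the class description.
struct ScalarRMSprop {
    double learning_rate;
    double decay_rate;
    double epsilon = 1e-6;
    double v = 0.0;  // moving average of squared gradients

    void step(ScalarParam& p) {
        v = decay_rate * v + (1.0 - decay_rate) * p.grad * p.grad;
        p.value -= learning_rate / std::sqrt(v + epsilon) * p.grad;
    }
};

// Minimizes f(x) = (x - 3)^2 starting from x = 0 and returns the final x.
double minimizeQuadratic(int iterations, double learning_rate, double decay_rate) {
    ScalarParam x;
    ScalarRMSprop opt{learning_rate, decay_rate};
    for (int i = 0; i < iterations; ++i) {
        x.grad = 2.0 * (x.value - 3.0);  // 1) compute the gradient first
        opt.step(x);                     // 2) then apply the RMSprop update
    }
    return x.value;
}
```

Because the learning rate is fixed, x ends up oscillating in a small neighborhood of 3 (on the order of the learning rate) rather than landing on it exactly. Any check on the result should therefore allow a tolerance of a few multiples of η.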