
NeuZephyr

Simple DL Framework


Base class for constructing neural network models with automatic computation graph management.

Public Member Functions | |

| Model () | |

| Default constructs Model instance with empty computation graph. | |

| ~Model () | |

| Safely destructs Model and associated computation nodes. | |

| Tensor & | forward () |

| Executes full forward propagation through computation graph. | |

| void | backward () |

| Performs backward propagation and gradient accumulation. | |

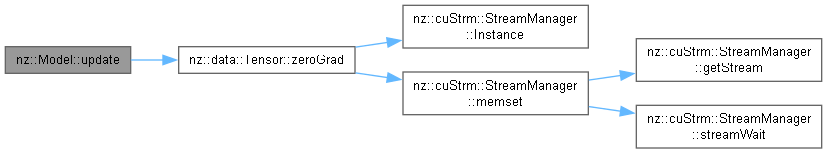

| void | update (opt::Optimizer *optimizer) const |

| Applies parameter updates using attached optimization strategy. | |

| Tensor::value_type | getLoss () const |

| Retrieves scalar loss value from last forward pass. | |

Protected Member Functions | |

| Node * | Add (Node *lhs, Node *rhs) |

| Creates addition operation node in computation graph (Low-level API) | |

| Node * | Sub (Node *lhs, Node *rhs) |

| Creates subtraction operation node in computation graph (Low-level API) | |

| Node * | Mul (Node *lhs, Node *rhs) |

| Creates matrix multiplication node in computation graph (Low-level API) | |

| Node * | Bias (Node *input) |

| Creates trainable bias parameter and adds element-wise to input (Mid-level API) | |

| Node * | Reshape (Node *input, const Tensor::shape_type &shape) |

| Modifies tensor dimensions while preserving data (Low-level API) | |

| Node * | Linear (Node *input, size_t outSize) |

| Implements fully-connected layer transformation (Top-level API) | |

| Node * | ReLU (Node *input) |

| Applies Rectified Linear Unit activation (Mid-level API) | |

| Node * | Sigmoid (Node *input) |

| Applies logistic sigmoid activation (Mid-level API) | |

| Node * | Tanh (Node *input) |

| Applies hyperbolic tangent activation (Mid-level API) | |

| Node * | LeakyReLU (Node *input, float alpha=0.01f) |

| Applies Leaky Rectified Linear Unit activation (Mid-level API) | |

| Node * | Swish (Node *input) |

| Applies self-gated swish activation (Mid-level API) | |

| Node * | ELU (Node *input, float alpha=1.0f) |

| Applies Exponential Linear Unit activation (Mid-level API) | |

| Node * | HardSigmoid (Node *input, float alpha=0.2f, float beta=0.5f) |

| Applies piecewise linear sigmoid approximation (Mid-level API) | |

| Node * | HardSwish (Node *input, float alpha=0.2f, float beta=0.5f) |

| Applies hardware-efficient swish activation (Mid-level API) | |

| Node * | Softmax (Node *input) |

| Applies channel-wise probability normalization (High-level API) | |

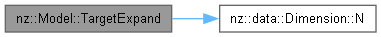

| Node * | TargetExpand (Node *input, const Tensor::shape_type &shape) |

| (Low-level) Batch expansion primitive for singleton tensors | |

| Node * | Img2Col (Node *input, Tensor::size_type kernelHeight, Tensor::size_type kernelWidth, Tensor::size_type stride, Tensor::size_type padding) |

| (Low-level) Image-to-column transformation primitive | |

| Node * | Col2Img (Node *input, Tensor::size_type outputHeight, Tensor::size_type outputWidth) |

| (Low-level) Column-to-image transformation primitive | |

| Node * | Conv2d (Node *input, Tensor::size_type outChannels, Tensor::size_type kernelHeight, Tensor::size_type kernelWidth, Tensor::size_type stride, Tensor::size_type padding, bool bias=true) |

| Executes optimized convolution using img2col acceleration (High-level API) | |

| Node * | AvgPool2d (Node *input, Tensor::size_type poolSize, Tensor::size_type stride, Tensor::size_type padding=0) |

| Performs 2D average pooling operation (Sliding window) | |

| Node * | GlobalAvgPool2d (Node *input) |

| Computes global average pooling over spatial dimensions. | |

| Node * | MaxPool2d (Node *input, Tensor::size_type poolSize, Tensor::size_type stride, Tensor::size_type padding=0) |

| Performs 2D maximum pooling operation. | |

| Node * | GlobalMaxPool2d (Node *input) |

| Computes global maximum pooling over spatial axes. | |

| void | MSELoss (Node *input, Node *target) |

| Establishes Mean Squared Error loss node as computational graph terminal. | |

| void | BCELoss (Node *input, Node *target) |

| Configures Binary Cross-Entropy loss as computation graph endpoint. | |

| void | defaultOutput (Node *input) |

| Provides zero-overhead tensor passthrough for inference outputs. | |

Related Symbols

(Note that these are not member symbols.)

| Return type | Symbol | Description |
|---|---|---|
| std::ostream & | operator<< (std::ostream &os, Model &model) | Serializes neural network computation graph structure to output stream. |

Base class for constructing neural network models with automatic computation graph management.

Provides infrastructure for building trainable models by composing computational nodes. Forward/backward propagation and parameter updates are handled automatically through an integrated computation graph.

Derive a custom model class from Model using public inheritance.

Declare and initialize input nodes with their tensor dimensions. Two initialization methods are supported.

Build the network in the subclass constructor using the layer composition pattern.

Train with the standard three-phase pattern (forward, backward, update) with optimizer integration.
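The three-phase training pattern can be illustrated without NeuZephyr itself. The sketch below is a hypothetical, self-contained stand-in: `ToyModel`, its scalar `update(float lr)`, and the plain SGD step are assumptions made for illustration (the documented `update` takes an `opt::Optimizer *` instead). It mirrors only the call order forward, backward, update, with `getLoss()` reading the loss cached by the last forward pass.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical ToyModel: a one-feature linear regressor y = w * x + b with an MSE loss.
// It only mirrors the forward() -> backward() -> update() -> getLoss() call order;
// it does not use or reproduce NeuZephyr's Model, Node, or opt::Optimizer types.
class ToyModel {
public:
    ToyModel(std::vector<float> x, std::vector<float> t) : x_(std::move(x)), t_(std::move(t)) {
        assert(x_.size() == t_.size() && !x_.empty());
    }

    // Phase 1: forward pass, caching predictions and the scalar loss.
    void forward() {
        pred_.assign(x_.size(), 0.0f);
        loss_ = 0.0f;
        for (std::size_t i = 0; i < x_.size(); ++i) {
            pred_[i] = w_ * x_[i] + b_;
            const float d = pred_[i] - t_[i];
            loss_ += d * d;
        }
        loss_ /= static_cast<float>(x_.size());
    }

    // Phase 2: backward pass, computing dL/dw and dL/db from the cached predictions.
    void backward() {
        gw_ = 0.0f;
        gb_ = 0.0f;
        const float scale = 2.0f / static_cast<float>(x_.size());
        for (std::size_t i = 0; i < x_.size(); ++i) {
            const float d = pred_[i] - t_[i];
            gw_ += scale * d * x_[i];
            gb_ += scale * d;
        }
    }

    // Phase 3: parameter update; plain SGD with step size lr stands in for an optimizer.
    void update(float lr) {
        w_ -= lr * gw_;
        b_ -= lr * gb_;
    }

    float getLoss() const { return loss_; }
    float weight() const { return w_; }
    float bias() const { return b_; }

private:
    std::vector<float> x_, t_, pred_;
    float w_ = 0.0f, b_ = 0.0f, gw_ = 0.0f, gb_ = 0.0f, loss_ = 0.0f;
};
```

For example, constructing `ToyModel` on x = 0, 1, 2, 3 with targets 2x + 1 gives an initial loss of 21; repeating `forward()`, `backward()`, `update(0.05f)` for 2000 iterations drives the loss toward zero and recovers w ≈ 2, b ≈ 1.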

The following table summarizes key components supported by the Model class:

| Component | Brief Description |
|---|---|
| Add | Performs element-wise addition between two nodes |
| Sub | Computes element-wise subtraction between two nodes |
| Mul | Performs matrix multiplication of two nodes |
| Bias | Applies learnable bias term to input tensor |
| Reshape | Modifies tensor dimensions without changing data |
| Linear | Implements fully-connected layer transformation |
| ReLU | Applies Rectified Linear Unit activation |
| Sigmoid | Computes logistic sigmoid activation |
| Tanh | Applies hyperbolic tangent activation |
| LeakyReLU | Leaky variant of ReLU with configurable negative slope |
| Swish | Computes self-gated activation (x * sigmoid(x)) |
| ELU | Exponential Linear Unit activation |
| HardSigmoid | Piecewise linear approximation of sigmoid |
| HardSwish | Hardware-friendly Swish variant with linear approximation |
| Softmax | Applies channel-wise softmax normalization |
| TargetExpand | Broadcasts target tensor dimensions to match input shape |
| Img2Col | Converts image tensor to column-major format for convolution optimization |
| Col2Img | Reconstructs image tensor from column-major representation |
| Conv2d | 2D convolution layer with configurable kernel/padding |
| AvgPool2d | Spatial average pooling operation |
| GlobalAvgPool2d | Global spatial averaging across feature maps |
| MaxPool2d | Spatial max pooling operation |
| GlobalMaxPool2d | Global spatial maximum pooling |
| MSELoss | Configures mean squared error as graph terminal node |
| BCELoss | Sets binary cross-entropy loss with implicit sigmoid |
| defaultOutput | Passthrough output node for inference-only models |

nz::Model::Model () [default]

Default constructs Model instance with empty computation graph.

Creates a valid Model object in its initial state.

nz::Model::~Model ()

Safely destructs Model and associated computation nodes.

Performs complete resource cleanup.

Node * nz::Model::Add (Node *lhs, Node *rhs) [protected]

Creates addition operation node in computation graph (Low-level API)

| lhs | Left operand node (device-to-device, non-owning) |

| rhs | Right operand node (device-to-device, non-owning) |

@complexity O(1) node creation + O(α(n)) graph insertion

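Node * nz::Model::AvgPool2d (Node *input, Tensor::size_type poolSize, Tensor::size_type stride, Tensor::size_type padding=0) [protected]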

Performs 2D average pooling operation (Sliding window)

| input | 4D tensor node (device-to-device, non-owning, shape [N,C,H,W]) |

| poolSize | Spatial extent of pooling (device-to-device, K ≥ 1) |

| stride | Step size for window movement (device-to-device, S ≥ 1) |

| padding | Input padding size (device-to-device, P ≥ 0) |

@complexity O(N·C·H_out·W_out·K²) computational operations
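To make the sliding-window arithmetic concrete, here is a minimal CPU sketch of average pooling over a single [H, W] plane (one (n, c) slice of an NCHW tensor). It is not NeuZephyr's kernel; `pooledExtent` and `avgPool2d` are hypothetical names, and treating padded cells as zeros that stay in the K·K divisor is an assumption (it matches PyTorch's default `count_include_pad=True`).

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Output extent of a pooling window: floor((n + 2P - K) / S) + 1.
std::size_t pooledExtent(std::size_t n, std::size_t k, std::size_t s, std::size_t p) {
    assert(k >= 1 && s >= 1 && n + 2 * p >= k);
    return (n + 2 * p - k) / s + 1;
}

// Average pooling over one row-major H x W plane.
// Assumption: padded cells count as zeros and stay in the K*K divisor.
std::vector<float> avgPool2d(const std::vector<float>& in, std::size_t h, std::size_t w,
                             std::size_t k, std::size_t s, std::size_t p) {
    assert(in.size() == h * w);
    const std::size_t ho = pooledExtent(h, k, s, p), wo = pooledExtent(w, k, s, p);
    std::vector<float> out(ho * wo, 0.0f);
    for (std::size_t oy = 0; oy < ho; ++oy)
        for (std::size_t ox = 0; ox < wo; ++ox) {
            float sum = 0.0f;
            for (std::size_t i = 0; i < k; ++i)
                for (std::size_t j = 0; j < k; ++j) {
                    // Signed coordinates so that padding offsets can go negative.
                    const long y = static_cast<long>(oy * s + i) - static_cast<long>(p);
                    const long x = static_cast<long>(ox * s + j) - static_cast<long>(p);
                    if (y >= 0 && y < static_cast<long>(h) && x >= 0 && x < static_cast<long>(w))
                        sum += in[static_cast<std::size_t>(y) * w + static_cast<std::size_t>(x)];
                }
            out[oy * wo + ox] = sum / static_cast<float>(k * k);
        }
    return out;
}
```

Each output cell visits K² inputs, which is where the O(N·C·H_out·W_out·K²) cost comes from once the plane loop is repeated for every (n, c).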

void nz::Model::backward ()

Performs backward propagation and gradient accumulation.

void nz::Model::BCELoss (Node *input, Node *target) [protected]

Configures Binary Cross-Entropy loss as computation graph endpoint.

| input | Logits tensor node (device-to-device, non-owning, shape [N,*]) |

| target | Binary labels tensor node (device-to-device, non-owning, shape [N,*]) |

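$$\mathcal{L}_{\text{BCE}} = -\frac{1}{K}\sum_{i=1}^{K}\left[\text{target}_i \log\sigma(\text{input}_i) + (1-\text{target}_i)\log\bigl(1-\sigma(\text{input}_i)\bigr)\right]$$

where σ denotes the sigmoid activation and K = numel(input).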

@complexity O(K) logarithmic operations + 3K element-wise operations
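Evaluating the formula literally can produce log(0) for large-magnitude logits. A common remedy, shown here as a hedged sketch rather than NeuZephyr's implementation (the name `bceWithLogits` is hypothetical), rewrites each term as max(x, 0) − x·t + log(1 + e^(−|x|)), which is algebraically identical to −[t·log σ(x) + (1 − t)·log(1 − σ(x))].

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Mean BCE over K elements, taking raw logits and applying the sigmoid implicitly.
// Uses the stable identity: max(x,0) - x*t + log(1 + exp(-|x|)).
float bceWithLogits(const std::vector<float>& logits, const std::vector<float>& target) {
    assert(logits.size() == target.size() && !logits.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        const double x = logits[i], t = target[i];
        sum += std::max(x, 0.0) - x * t + std::log1p(std::exp(-std::fabs(x)));
    }
    return static_cast<float>(sum / static_cast<double>(logits.size()));  // 1/K normalization
}
```

With all logits at 0, σ = 0.5 and every term equals log 2 ≈ 0.6931 regardless of the label.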

Node * nz::Model::Bias (Node *input) [protected]

Creates a trainable bias parameter and adds it element-wise to the input (Mid-level API)

| input | Feature map node (device-to-device, non-owning) |

@complexity O(1) parameter creation + O(1) graph insertion

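Node * nz::Model::Col2Img (Node *input, Tensor::size_type outputHeight, Tensor::size_type outputWidth) [protected]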

(Low-level) Column-to-image transformation primitive

| input | Column-formatted node (device-to-device, non-owning, shape [N,1,H_out×W_out,C_out]) |

| outputHeight | Original spatial height (device-to-device, H ∈ ℕ+) |

| outputWidth | Original spatial width (device-to-device, W ∈ ℕ+) |

Performs the inverse operation of Img2Col, rearranging the column-formatted input back into an image tensor of shape [N, C_out, H, W].

@complexity O(N·C_out·H·W) spatial reconstruction

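Node * nz::Model::Conv2d (Node *input, Tensor::size_type outChannels, Tensor::size_type kernelHeight, Tensor::size_type kernelWidth, Tensor::size_type stride, Tensor::size_type padding, bool bias=true) [protected]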

Executes optimized convolution using img2col acceleration (High-level API)

| input | 4D input tensor node (device-to-device, non-owning, shape [N,C,H,W]) |

| outChannels | Output feature map count (device-to-device, C_out ≥ 1) |

| kernelHeight | Vertical filter dimension (device-to-device, K_h ≥ 1) |

| kernelWidth | Horizontal filter dimension (device-to-device, K_w ≥ 1) |

| stride | Convolution step size (device-to-device, S ≥ 1) |

| padding | Zero-padding size (device-to-device, P ≥ 0) |

| bias | Enable bias addition (device-to-device, default=true) |

$$H_{out} = \left\lfloor \frac{H + 2P - K_h}{S} \right\rfloor + 1, \qquad W_{out} = \left\lfloor \frac{W + 2P - K_w}{S} \right\rfloor + 1$$

@complexity O(N·C_out·K_h·K_w·C·H_out·W_out) computational complexity
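The output-size formulas and the stated complexity can be checked with a few lines of arithmetic. This is a hedged helper sketch, not part of NeuZephyr; `conv2dOutputHW` and `conv2dMacs` are hypothetical names. Unsigned integer division implements the floor because every operand is non-negative.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>

// Spatial output size of a 2D convolution: floor((X + 2P - K) / S) + 1 per axis.
std::pair<std::size_t, std::size_t> conv2dOutputHW(std::size_t h, std::size_t w,
                                                   std::size_t kh, std::size_t kw,
                                                   std::size_t s, std::size_t p) {
    assert(s >= 1 && kh >= 1 && kw >= 1 && h + 2 * p >= kh && w + 2 * p >= kw);
    return {(h + 2 * p - kh) / s + 1, (w + 2 * p - kw) / s + 1};
}

// Multiply-accumulate count N * C_out * K_h * K_w * C * H_out * W_out,
// i.e. the quantity behind the documented complexity bound.
std::size_t conv2dMacs(std::size_t n, std::size_t c, std::size_t cOut, std::size_t h, std::size_t w,
                       std::size_t kh, std::size_t kw, std::size_t s, std::size_t p) {
    const std::pair<std::size_t, std::size_t> hw = conv2dOutputHW(h, w, kh, kw, s, p);
    return n * cOut * kh * kw * c * hw.first * hw.second;
}
```

For instance, a 3×3 kernel with stride 1 and padding 1 preserves a 32×32 input, and a 16-filter layer on a 3-channel 32×32 image then costs 442,368 multiply-accumulates per sample.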

void nz::Model::defaultOutput (Node *input) [protected]

Provides zero-overhead tensor passthrough for inference outputs.

| input | Source tensor node (device-to-device, non-owning, any shape) |

@complexity O(1) tensor reference operation (zero data copy)

Node * nz::Model::ELU (Node *input, float alpha=1.0f) [protected]

Applies Exponential Linear Unit activation (Mid-level API)

| input | Feature node (device-to-device, non-owning) |

| alpha | Saturation coefficient (device-to-device, α > 0) |

@complexity O(n) conditional exponential operations
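The conditional exponential referred to above is the standard ELU definition: x for x > 0, α(eˣ − 1) otherwise, so outputs saturate at −α for very negative inputs. A minimal element-wise sketch follows; the function name `elu` and the `std::vector` representation are illustrative assumptions, not NeuZephyr's node.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// ELU: x for x > 0, alpha * (exp(x) - 1) otherwise; saturates at -alpha.
std::vector<float> elu(const std::vector<float>& x, float alpha = 1.0f) {
    assert(alpha > 0.0f);
    std::vector<float> y(x.size());
    for (std::size_t i = 0; i < x.size(); ++i)
        y[i] = x[i] > 0.0f ? x[i] : alpha * std::expm1(x[i]);  // expm1 is accurate near 0
    return y;
}
```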

Tensor & nz::Model::forward ()

Executes full forward propagation through computation graph.

@complexity O(n) where n = number of computation graph nodes

Tensor::value_type nz::Model::getLoss () const

Retrieves scalar loss value from last forward pass.

Node * nz::Model::GlobalAvgPool2d (Node *input) [protected]

Computes global average pooling over spatial dimensions.

| input | 4D tensor node (device-to-device, non-owning, shape [N,C,H,W]) |

@complexity O(N·C·H·W) summation operations

Node * nz::Model::GlobalMaxPool2d (Node *input) [protected]

Computes global maximum pooling over spatial axes.

| input | 4D tensor node (device-to-device, non-owning, shape [N,C,H,W]) |

@complexity O(N·C·H·W) search operations
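Both global pooling reductions collapse each H×W plane of an NCHW tensor to a single value, which is why each costs O(N·C·H·W). The sketch below works on a flat row-major buffer; `globalAvgPool2d` and `globalMaxPool2d` here are hypothetical free functions, and the note that the result corresponds to a [N, C, 1, 1] tensor is an assumption about how the library keeps the rank.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Global average pooling: one mean per (n, c) plane, returned as N*C values.
std::vector<float> globalAvgPool2d(const std::vector<float>& in, std::size_t n, std::size_t c,
                                   std::size_t h, std::size_t w) {
    const std::size_t plane = h * w;
    assert(plane > 0 && in.size() == n * c * plane);
    std::vector<float> out(n * c);
    for (std::size_t nc = 0; nc < n * c; ++nc) {
        double sum = 0.0;  // accumulate in double to limit rounding error on large planes
        for (std::size_t k = 0; k < plane; ++k) sum += in[nc * plane + k];
        out[nc] = static_cast<float>(sum / static_cast<double>(plane));
    }
    return out;
}

// Global max pooling: one maximum per (n, c) plane, returned as N*C values.
std::vector<float> globalMaxPool2d(const std::vector<float>& in, std::size_t n, std::size_t c,
                                   std::size_t h, std::size_t w) {
    const std::size_t plane = h * w;
    assert(plane > 0 && in.size() == n * c * plane);
    std::vector<float> out(n * c);
    for (std::size_t nc = 0; nc < n * c; ++nc) {
        const auto first = in.begin() + static_cast<std::ptrdiff_t>(nc * plane);
        out[nc] = *std::max_element(first, first + static_cast<std::ptrdiff_t>(plane));
    }
    return out;
}
```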

Node * nz::Model::HardSigmoid (Node *input, float alpha=0.2f, float beta=0.5f) [protected]

Applies piecewise linear sigmoid approximation (Mid-level API)

| input | Feature node (device-to-device, non-owning) |

| alpha | Slope parameter (device-to-device, typical value: 0.2) |

| beta | Offset parameter (device-to-device, typical value: 0.5) |

@complexity O(n) element-wise linear operations

Node * nz::Model::HardSwish (Node *input, float alpha=0.2f, float beta=0.5f) [protected]

Applies hardware-efficient swish activation (Mid-level API)

| input | Feature node (device-to-device, non-owning) |

| alpha | Slope parameter (device-to-device, typical: 1/6) |

| beta | Offset parameter (device-to-device, typical: 0.5) |

@complexity O(n) element-wise operations (two linear + multiplication)
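The two piecewise-linear activations above can be sketched as clamp(α·x + β, 0, 1) and x·HardSigmoid(x). The clamp form is an assumption consistent with the documented alpha/beta parameters, not a copy of NeuZephyr's kernels. Note that this sketch defaults HardSwish's alpha to 1/6 (the "typical" value in its parameter table), which makes it equal to the common x·ReLU6(x + 3)/6 form, whereas the documented signature defaults alpha to 0.2f.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// HardSigmoid sketch: clamp(alpha * x + beta, 0, 1).
float hardSigmoid(float x, float alpha = 0.2f, float beta = 0.5f) {
    return std::min(1.0f, std::max(0.0f, alpha * x + beta));
}

// HardSwish sketch: x * hardSigmoid(x). With alpha = 1/6 and beta = 0.5 this equals
// x * ReLU6(x + 3) / 6.
float hardSwish(float x, float alpha = 1.0f / 6.0f, float beta = 0.5f) {
    return x * hardSigmoid(x, alpha, beta);
}
```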

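Node * nz::Model::Img2Col (Node *input, Tensor::size_type kernelHeight, Tensor::size_type kernelWidth, Tensor::size_type stride, Tensor::size_type padding) [protected]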

(Low-level) Image-to-column transformation primitive

| input | 4D tensor node (device-to-device, non-owning, shape [N,C,H,W]) |

| kernelHeight | Filter height (device-to-device, K_h ≥ 1) |

| kernelWidth | Filter width (device-to-device, K_w ≥ 1) |

| stride | Convolution step size (device-to-device, S ≥ 1) |

| padding | Zero-padding size (device-to-device, P ≥ 0) |

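$$\text{Output}\bigl(n, 0, hw, (c, i, j)\bigr) = \text{Input}\bigl(n,\; c,\; \lfloor hw / W_{out} \rfloor \cdot S - P + i,\; (hw \bmod W_{out}) \cdot S - P + j\bigr)$$

where hw ∈ [0, H_out·W_out) indexes output positions, the last axis (ckk) enumerates the C·K_h·K_w triples (c, i, j) with c ∈ [0, C), i ∈ [0, K_h), j ∈ [0, K_w), and input positions that fall in the padding region read as zero.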

@complexity O(N·C·K_h·K_w·H_out·W_out) memory reorganization
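The image-to-column mapping can be sketched on the CPU for a single image stored as [C, H, W]. This is an illustrative sketch, not NeuZephyr's CUDA kernel; the name `img2col` and the channel-major column order ckk = (c·K_h + i)·K_w + j are assumptions, since the library's exact linearization of ckk is not stated here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// im2col for one image stored row-major as [C, H, W].
// Output is row-major [H_out*W_out, C*K_h*K_w]: one row per output position.
// Assumption: column index ckk = (c*K_h + i)*K_w + j; padded cells read as 0.
std::vector<float> img2col(const std::vector<float>& in, std::size_t c, std::size_t h, std::size_t w,
                           std::size_t kh, std::size_t kw, std::size_t s, std::size_t p) {
    assert(in.size() == c * h * w && s >= 1 && h + 2 * p >= kh && w + 2 * p >= kw);
    const std::size_t ho = (h + 2 * p - kh) / s + 1, wo = (w + 2 * p - kw) / s + 1;
    const std::size_t cols = c * kh * kw;
    std::vector<float> out(ho * wo * cols, 0.0f);
    for (std::size_t hw = 0; hw < ho * wo; ++hw) {
        // Top-left corner of this window in (possibly negative) input coordinates.
        const long baseY = static_cast<long>((hw / wo) * s) - static_cast<long>(p);
        const long baseX = static_cast<long>((hw % wo) * s) - static_cast<long>(p);
        for (std::size_t ch = 0; ch < c; ++ch)
            for (std::size_t i = 0; i < kh; ++i)
                for (std::size_t j = 0; j < kw; ++j) {
                    const long y = baseY + static_cast<long>(i), x = baseX + static_cast<long>(j);
                    if (y < 0 || x < 0 || y >= static_cast<long>(h) || x >= static_cast<long>(w))
                        continue;  // padding region: leave the zero in place
                    out[hw * cols + (ch * kh + i) * kw + j] =
                        in[(ch * h + static_cast<std::size_t>(y)) * w + static_cast<std::size_t>(x)];
                }
    }
    return out;
}
```

Multiplying this [H_out·W_out, C·K_h·K_w] matrix by a [C·K_h·K_w, C_out] weight matrix yields [H_out·W_out, C_out], the same layout Col2Img accepts as input, which is why convolution reduces to a single matrix product.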

Node * nz::Model::LeakyReLU (Node *input, float alpha=0.01f) [protected]

Applies Leaky Rectified Linear Unit activation (Mid-level API)

| input | Feature node (device-to-device, non-owning) |

| alpha | Negative slope coefficient (device-to-device, range: 0 < α < 1) |

@complexity O(n) conditional element-wise operation
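The conditional operation is a single branch per element: positive inputs pass through and negative inputs are scaled by α. A minimal sketch, with `leakyRelu` as a hypothetical name operating on a plain vector rather than a graph node:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// LeakyReLU: x for x > 0, alpha * x otherwise (0 < alpha < 1 per the parameter table).
std::vector<float> leakyRelu(const std::vector<float>& x, float alpha = 0.01f) {
    assert(alpha > 0.0f && alpha < 1.0f);
    std::vector<float> y(x.size());
    for (std::size_t i = 0; i < x.size(); ++i) y[i] = x[i] > 0.0f ? x[i] : alpha * x[i];
    return y;
}
```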

Node * nz::Model::Linear (Node *input, size_t outSize) [protected]

Implements fully-connected layer transformation (Top-level API)

| input | Input feature node (device-to-device, non-owning) |

| outSize | Output feature dimension (device-to-device) |

@complexity O(outSize * inputSize) parameter initialization + O(1) node insertion

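Node * nz::Model::MaxPool2d (Node *input, Tensor::size_type poolSize, Tensor::size_type stride, Tensor::size_type padding=0) [protected]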

Performs 2D maximum pooling operation.

| input | 4D tensor node (device-to-device, non-owning, shape [N,C,H,W]) |

| poolSize | Spatial window size (device-to-device, K ≥ 1) |

| stride | Window traversal step (device-to-device, S ≥ 1) |

| padding | Zero-padding extent (device-to-device, P ≥ 0) |

@complexity O(N·C·H_out·W_out·K²) comparisons
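A single-plane CPU sketch of max pooling follows; `maxPool2d` is a hypothetical name, not NeuZephyr's kernel. How padding interacts with the maximum is an assumption here: padded cells are skipped (as if they held −∞), so padding never wins. A literal zero-padding implementation would instead let 0 compete in the maximum, which changes results for all-negative windows.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <vector>

// Max pooling over one row-major H x W plane.
// Assumption: padded cells are skipped, so only real input values compete for the max.
std::vector<float> maxPool2d(const std::vector<float>& in, std::size_t h, std::size_t w,
                             std::size_t k, std::size_t s, std::size_t p) {
    // p < k guarantees every window overlaps at least one real input cell.
    assert(in.size() == h * w && k >= 1 && s >= 1 && p < k && h + 2 * p >= k && w + 2 * p >= k);
    const std::size_t ho = (h + 2 * p - k) / s + 1, wo = (w + 2 * p - k) / s + 1;
    std::vector<float> out(ho * wo, -std::numeric_limits<float>::infinity());
    for (std::size_t oy = 0; oy < ho; ++oy)
        for (std::size_t ox = 0; ox < wo; ++ox)
            for (std::size_t i = 0; i < k; ++i)
                for (std::size_t j = 0; j < k; ++j) {
                    const long y = static_cast<long>(oy * s + i) - static_cast<long>(p);
                    const long x = static_cast<long>(ox * s + j) - static_cast<long>(p);
                    if (y < 0 || x < 0 || y >= static_cast<long>(h) || x >= static_cast<long>(w))
                        continue;
                    const float v = in[static_cast<std::size_t>(y) * w + static_cast<std::size_t>(x)];
                    if (v > out[oy * wo + ox]) out[oy * wo + ox] = v;  // one comparison per cell
                }
    return out;
}
```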

void nz::Model::MSELoss (Node *input, Node *target) [protected]

Establishes Mean Squared Error loss node as computational graph terminal.

| input | Prediction tensor node (device-to-device, non-owning, shape [N,*]) |

| target | Ground truth tensor node (device-to-device, non-owning, shape [N,*]) |

$$\mathcal{L}_{\text{MSE}} = \frac{1}{K}\sum_{i=1}^{K}\left(\text{input}_i - \text{target}_i\right)^2, \qquad K = \operatorname{numel}(\text{input})$$

@complexity O(K) parallel operations where K = total elements
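The loss and the gradient that a backward pass would propagate into `input` follow directly from the formula: ∂ℒ/∂input_i = 2(input_i − target_i)/K. The sketch below is a plain CPU illustration with hypothetical names `mseLoss` and `mseGrad`, not the library's loss node.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// MSE over K = numel(input) elements.
float mseLoss(const std::vector<float>& input, const std::vector<float>& target) {
    assert(input.size() == target.size() && !input.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < input.size(); ++i) {
        const double d = static_cast<double>(input[i]) - target[i];
        sum += d * d;
    }
    return static_cast<float>(sum / static_cast<double>(input.size()));
}

// Gradient with respect to the input: 2 * (input_i - target_i) / K.
std::vector<float> mseGrad(const std::vector<float>& input, const std::vector<float>& target) {
    assert(input.size() == target.size() && !input.empty());
    std::vector<float> g(input.size());
    const float scale = 2.0f / static_cast<float>(input.size());
    for (std::size_t i = 0; i < input.size(); ++i) g[i] = scale * (input[i] - target[i]);
    return g;
}
```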

Node * nz::Model::Mul (Node *lhs, Node *rhs) [protected]

Creates matrix multiplication node in computation graph (Low-level API)

| lhs | Left matrix node (device-to-device, non-owning) |

| rhs | Right matrix node (device-to-device, non-owning) |

@complexity O(1) node creation + O(α(n)) graph insertion

Node * nz::Model::ReLU (Node *input) [protected]

Applies Rectified Linear Unit activation (Mid-level API)

| input | Feature node (device-to-device, non-owning) |

@complexity O(n) element-wise operation (n = tensor elements)

Node * nz::Model::Reshape (Node *input, const Tensor::shape_type &shape) [protected]

Modifies tensor dimensions while preserving data (Low-level API)

| input | Source tensor node (device-to-device, non-owning) |

| shape | Target dimension specification (device-to-device) |

@complexity O(1) view creation + O(α(n)) graph update

Node * nz::Model::Sigmoid (Node *input) [protected]

Applies logistic sigmoid activation (Mid-level API)

| input | Feature node (device-to-device, non-owning) |

@complexity O(n) element-wise exponential + division

Node * nz::Model::Softmax (Node *input) [protected]

Applies channel-wise probability normalization (High-level API)

| input | Logits node (device-to-device, non-owning) |

@complexity O(n) exponential operations + O(C) reduction per channel
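The per-channel reduction mentioned in the complexity note is the normalizing sum of exponentials. A standard, numerically stable way to compute it subtracts the maximum logit before exponentiating, which avoids overflow without changing the result. This sketch normalizes one vector of logits (for example, the C channel values at one position); the function name and the choice of axis are illustrative assumptions.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Numerically stable softmax over one vector of logits.
std::vector<float> softmax(const std::vector<float>& logits) {
    assert(!logits.empty());
    const float m = *std::max_element(logits.begin(), logits.end());
    std::vector<float> out(logits.size());
    double sum = 0.0;
    for (std::size_t i = 0; i < logits.size(); ++i) {
        out[i] = std::exp(logits[i] - m);  // every exponent is <= 0, so no overflow
        sum += out[i];
    }
    for (float& v : out) v = static_cast<float>(v / sum);
    return out;
}
```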

Node * nz::Model::Sub (Node *lhs, Node *rhs) [protected]

Creates subtraction operation node in computation graph (Low-level API)

| lhs | Left operand node (device-to-device, non-owning) |

| rhs | Right operand node (device-to-device, non-owning) |

@complexity O(1) node creation + O(α(n)) graph insertion

Node * nz::Model::Swish (Node *input) [protected]

Applies self-gated swish activation (Mid-level API)

| input | Feature node (device-to-device, non-owning) |

@complexity O(n) element-wise operations (sigmoid + multiplication)
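Swish multiplies each input by its own sigmoid, x·σ(x) = x / (1 + e^(−x)). A one-function sketch, with `swish` as an illustrative name rather than the library's node:

```cpp
#include <cassert>
#include <cmath>

// Swish: x * sigmoid(x), written as x / (1 + exp(-x)).
// For very negative x, exp(-x) overflows to +inf and the result correctly tends to 0.
float swish(float x) { return x / (1.0f + std::exp(-x)); }
```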

Node * nz::Model::Tanh (Node *input) [protected]

Applies hyperbolic tangent activation (Mid-level API)

| input | Feature node (device-to-device, non-owning) |

@complexity O(n) element-wise exponential operations

Node * nz::Model::TargetExpand (Node *input, const Tensor::shape_type &shape) [protected]

(Low-level) Batch expansion primitive for singleton tensors

| input | Source tensor node (device-to-device, non-owning, must have batch=1) |

| shape | Target shape specification (device-to-device, NCHW format) |

Operates by replicating the singleton batch dimension N times according to:

$$\text{Output}(n, c, h, w) = \text{Input}(0, c, h, w), \qquad n = 0, \dots, N-1$$

@complexity O(N·C·H·W) memory copy operations (N = target batch size)
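Because the single sample is copied once per batch entry, a row-major buffer makes the replication a sequence of contiguous block copies. The sketch below assumes a flat `std::vector` holding one [1, C, H, W] sample; `targetExpand` is a hypothetical free function, not the library's node.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Repeats one [1, C, H, W] sample n times to form [n, C, H, W] in row-major order.
std::vector<float> targetExpand(const std::vector<float>& sample, std::size_t n) {
    assert(n >= 1);
    std::vector<float> out;
    out.reserve(sample.size() * n);
    for (std::size_t b = 0; b < n; ++b) out.insert(out.end(), sample.begin(), sample.end());
    return out;
}
```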

Definition at line 204 of file Model.cu.

void nz::Model::update (opt::Optimizer *optimizer) const

Applies parameter updates using attached optimization strategy.

| optimizer | Optimization algorithm instance (device-to-device) |

Definition at line 20 of file Model.cu.

std::ostream & operator<< (std::ostream &os, Model &model) [related]

Serializes neural network computation graph structure to output stream.

| os | Output stream for graph representation (host-to-device) |

| model | Model instance to visualize (device-to-host) |

Implements graph structure serialization by recursively traversing the computation graph. The formatted output includes: