
NeuZephyr

Simple DL Framework


Centralized CUDA stream and resource management system with automatic dependency tracking.

Public Member Functions | |

| ~StreamManager () | |

| Destructor for the StreamManager class. | |

| void | malloc (T **data, const size_t size) |

| Asynchronously allocates device memory for type-specific data with stream-ordered dependency tracking. | |

| void | free (T *data) |

| Frees the CUDA device memory pointed to by the given pointer. | |

| void | freeHost (T *data) |

| Frees the pinned host memory pointed to by the given pointer. | |

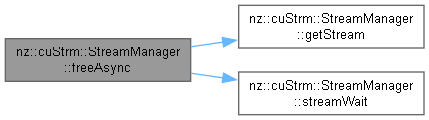

| void | freeAsync (T *data) |

| Asynchronously frees the CUDA device memory pointed to by the given pointer. | |

| void | memset (T *data, const int value, const size_t count) |

| Asynchronously sets a block of CUDA device memory to a specified value. | |

| void | memcpy (T *dst, T *src, const size_t size, const cudaMemcpyKind kind) |

| Asynchronously copies data between CUDA device and host memory based on the specified memory copy kind. | |

| template<typename F , typename... Args> | |

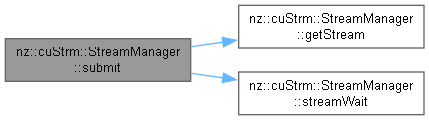

| void | submit (F func, dim3 grid, dim3 block, size_t shared, T *odata, T *idata, Args... args) |

| Asynchronously submits a CUDA kernel with stream-ordered dependency management. | |

| void | sync () const |

| Synchronizes all CUDA streams in the stream pool by blocking the host thread. | |

| void | syncData (T *data) |

| Synchronizes host thread with completion events for a specific data object. | |

| void | randomize (T *data, size_t size, size_t seed, curandRngType_t rngType) |

| Generates uniformly distributed random numbers on GPU using CURAND. | |

| cudaStream_t | getStream () |

| Acquires CUDA stream from pool using round-robin scheduling. | |

| void | streamWait (T *data, cudaStream_t stream) |

| Synchronizes CUDA stream execution until data writes complete. | |

| void | recordData (T *data, cudaStream_t stream) |

| Records write completion event for asynchronous data operations. | |

Static Public Member Functions | |

| static StreamManager & | Instance () |

| Returns a reference to the singleton instance of the StreamManager. | |

Centralized CUDA stream and resource management system with automatic dependency tracking.

This singleton class implements a high-level abstraction layer for CUDA concurrency management, combining stream scheduling, event-based dependency tracking, and resource lifecycle management in a unified interface. As the core of the framework's GPU task scheduling infrastructure, it enforces strict execution-ordering constraints while maximizing concurrent throughput.

Core operations:

- malloc/mallocAsync: stream-ordered memory allocation
- free/freeAsync: type-specific deallocation with safety checks
- submit* methods supporting 1-4 outputs and mixed data types
- sync/syncData: full-pipeline or data-specific synchronization
- randomize: managed CURAND initialization and execution

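Design:

- Stream scheduling: implements lightweight load balancing through cyclic (round-robin) stream allocation over a pool of maxStream non-blocking CUDA streams created at construction.
- Operation protocol: standardizes all GPU operations through a four-stage protocol: acquire a stream (getStream), wait for pending writes to the operands (streamWait), enqueue the asynchronous operation, and record its completion in the eventPool (recordData).
- Event management: extends EventPool's data-event binding with type-aware management.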

Definition at line 131 of file StreamManager.cuh.

~StreamManager () [inline]

Destructor for the StreamManager class.

This destructor is responsible for cleaning up the resources used by the StreamManager. It first synchronizes all the streams in the stream pool, then destroys each CUDA stream in the pool, and finally resets the event pool.

Memory Management Strategy:

- All CUDA streams in streamPool are explicitly destroyed with cudaStreamDestroy, which releases the resources associated with them.
- eventPool is reset, which should release any resources held by the event pool.

Exception Handling Mechanism:

cudaStreamDestroy can return an error code indicating a failure to destroy a stream. These errors are not explicitly handled in this destructor; it is assumed that the calling code or the CUDA runtime will handle them appropriately.

Relationship with Other Components:

The destructor calls the sync function to synchronize the streams before destroying them. It also uses cudaStreamDestroy from the CUDA library to release the stream resources, and the reset method of the eventPool object.

Definition at line 180 of file StreamManager.cuh.
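A host-only C++ model of the teardown order described above may help. It makes no CUDA calls: the hypothetical ModelStreamManager only logs each step, so the order (synchronize, then destroy every stream, then reset the event pool) can be checked. It is a sketch under those assumptions, not the library's destructor.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Host-only model of the destructor's teardown order. No CUDA calls are made:
// each step appends a label to a log. TeardownLog and ModelStreamManager are
// hypothetical names used only for this illustration.
struct TeardownLog {
    std::vector<std::string> entries;
};

class ModelStreamManager {
public:
    ModelStreamManager(std::size_t streamCount, TeardownLog& log)
        : streamCount_(streamCount), log_(log) {}

    ~ModelStreamManager() {
        // 1. Stand-in for sync(): the real class waits for every stream here.
        sync();
        // 2. Stand-in for cudaStreamDestroy on each pooled stream.
        for (std::size_t i = 0; i < streamCount_; ++i) {
            log_.entries.push_back("destroy stream " + std::to_string(i));
        }
        // 3. Stand-in for resetting the event pool.
        log_.entries.push_back("reset event pool");
    }

private:
    void sync() { log_.entries.push_back("sync all streams"); }

    std::size_t streamCount_;
    TeardownLog& log_;
};
```

Placing the synchronization first means no enqueued work is still pending when the streams and the event pool are released.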

void free (T *data) [inline]

Frees the CUDA device memory pointed to by the given pointer.

| data | A pointer to the CUDA device memory to be freed (device-to-host). |

This function is responsible for releasing the CUDA device memory pointed to by data. Before freeing the memory, it calls the syncData function to ensure that any pending data synchronization operations are completed. The cudaFree function from the CUDA library is then used to release the device memory.

Memory Management Strategy:

- After cudaFree returns, the memory pointed to by data is released and must not be accessed again.

Exception Handling Mechanism:

cudaFree can return an error code indicating a failure to free the memory. These errors are not explicitly handled in this function; it is assumed that the calling code or the CUDA runtime will handle them appropriately.

Relationship with Other Components:

This function depends on syncData to synchronize the data before freeing the memory, and on cudaFree from the CUDA library.

Note: data must point to valid CUDA device memory. Passing a null pointer or a pointer to non-CUDA device memory will lead to undefined behavior.

Definition at line 263 of file StreamManager.cuh.

void freeAsync (T *data) [inline]

Asynchronously frees the CUDA device memory pointed to by the given pointer.

| data | A pointer to the CUDA device memory to be freed (device-to-host). |

This function asynchronously releases the CUDA device memory pointed to by data. It first retrieves a CUDA stream using the getStream function. It then calls streamWait so that the stream waits for all pending writes recorded for data. Finally, it uses cudaFreeAsync to free the device memory in the retrieved stream, so the release is ordered after those writes.

Memory Management Strategy:

- Once cudaFreeAsync is called, the memory is released after all previously enqueued work in the stream has completed. The memory must not be accessed after this call.

Exception Handling Mechanism:

Errors from the underlying CUDA calls, including cudaFreeAsync, are not explicitly handled in this function; it is assumed that the calling code or the CUDA runtime will handle them appropriately.

Relationship with Other Components:

This function uses getStream to obtain a CUDA stream, streamWait to order the stream after pending writes to data, and cudaFreeAsync from the CUDA library to perform the asynchronous deallocation.

Note: data must point to valid CUDA device memory. Passing a null pointer or a pointer to non-CUDA device memory will lead to undefined behavior.

Definition at line 325 of file StreamManager.cuh.

void freeHost (T *data) [inline]

Frees the pinned host memory pointed to by the given pointer.

| data | A pointer to the pinned host memory to be freed (host-to-host). |

This function is designed to release the pinned host memory allocated by CUDA. Before freeing the memory, it invokes the syncData function to make sure that all data synchronization operations related to this memory are finished. Subsequently, it uses the cudaFreeHost function from the CUDA library to free the pinned host memory.

Memory Management Strategy:

- After cudaFreeHost is called, the memory pointed to by data is released and must no longer be accessed.

Exception Handling Mechanism:

cudaFreeHost may return an error code if it fails to free the memory. These errors are not explicitly handled within this function; it is assumed that the calling code or the CUDA runtime will deal with them appropriately.

Relationship with Other Components:

This function relies on syncData for data synchronization and on cudaFreeHost from the CUDA library for the actual deallocation.

Note: data must point to valid pinned host memory allocated by CUDA. Passing a null pointer or a pointer to non-pinned host memory will result in undefined behavior.

Definition at line 299 of file StreamManager.cuh.

cudaStream_t getStream () [inline]

Acquires CUDA stream from pool using round-robin scheduling.

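This function returns the next stream in the pool in cyclic (round-robin) order, so consecutive operations are spread across the available streams.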

Definition at line 799 of file StreamManager.cuh.
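Round-robin acquisition amounts to a counter taken modulo the pool size. The sketch below models this on the host with a hypothetical RoundRobinPool in which plain integers stand in for cudaStream_t handles; the atomic counter is an assumption added for thread safety and is not described in the source.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <vector>

// Host-side model of round-robin stream acquisition. StreamHandle stands in
// for cudaStream_t; RoundRobinPool and acquire() are hypothetical names.
using StreamHandle = int;

class RoundRobinPool {
public:
    // Precondition (assumed): count > 0, otherwise acquire() divides by zero.
    explicit RoundRobinPool(std::size_t count) {
        for (std::size_t i = 0; i < count; ++i) {
            streams_.push_back(static_cast<StreamHandle>(i));
        }
    }

    // Returns streams in cyclic order: 0, 1, ..., n-1, 0, 1, ...
    // fetch_add gives each concurrent caller a distinct ticket.
    StreamHandle acquire() {
        std::size_t ticket = next_.fetch_add(1, std::memory_order_relaxed);
        return streams_[ticket % streams_.size()];
    }

private:
    std::vector<StreamHandle> streams_;
    std::atomic<std::size_t> next_{0};
};
```

With three streams, four consecutive calls return 0, 1, 2 and then wrap back to 0.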

static StreamManager & Instance () [inline, static]

Returns a reference to the singleton instance of the StreamManager.

This function implements the singleton pattern for the StreamManager class, ensuring that only one StreamManager instance exists for the lifetime of the program. The instance is constructed with the initial parameters 16 and 128. Because it is a static local object, it is created on the first call to this function and destroyed automatically when the program terminates. As a simple accessor, the function has no specific exception handling. It serves as the central access point through which other components interact with the StreamManager.

Definition at line 154 of file StreamManager.cuh.
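The behavior described above (created on the first call, destroyed at program exit, no explicit cleanup) appears to match a function-local static, often called a Meyers singleton. A minimal sketch follows; ModelManager is hypothetical, and its constructor parameters are named first and second because this section gives only the values 16 and 128, not their meaning.

```cpp
#include <cassert>
#include <cstddef>

// Minimal function-local-static singleton. ModelManager and its accessors are
// hypothetical; only the values 16 and 128 come from the documentation.
class ModelManager {
public:
    static ModelManager& Instance() {
        // Constructed on first call (thread-safe since C++11) and destroyed
        // automatically during static destruction at program exit.
        static ModelManager instance(16, 128);
        return instance;
    }

    // Copying would create a second instance, so it is disabled.
    ModelManager(const ModelManager&) = delete;
    ModelManager& operator=(const ModelManager&) = delete;

    std::size_t first() const { return first_; }
    std::size_t second() const { return second_; }

private:
    ModelManager(std::size_t first, std::size_t second)
        : first_(first), second_(second) {}

    std::size_t first_;
    std::size_t second_;
};
```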

|

inline |

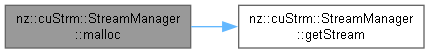

Asynchronously allocates device memory for type-specific data with stream-ordered dependency tracking.

| data | Double pointer to device memory (host-to-device parameter). Receives the allocated memory address. |

| size | Number of elements to allocate (host-to-device parameter). |

This method implements a stream-ordered memory allocation workflow: the allocation is enqueued on a stream from the pool, and its completion is tracked so that subsequent operations on data are ordered after it.

Exceptions: none are thrown explicitly, but CUDA errors can be checked using cudaGetLastError().

Definition at line 230 of file StreamManager.cuh.

|

inline |

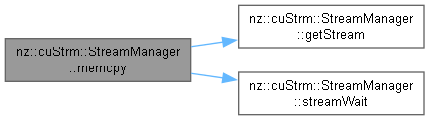

Asynchronously copies data between CUDA device and host memory based on the specified memory copy kind.

| dst | A pointer to the destination memory (memory flow depends on kind). |

| src | A pointer to the source memory (memory flow depends on kind). |

| size | The number of bytes to copy. |

| kind | The type of memory copy operation (cudaMemcpyKind). This determines the direction of the memory transfer (e.g., host-to-device, device-to-host, etc.). |

This function performs an asynchronous memory copy. It first retrieves a CUDA stream using the getStream function. It then calls streamWait twice, once for src and once for dst, so that the stream waits for all pending writes recorded for either buffer. Next, it uses cudaMemcpyAsync to copy size bytes from src to dst in the retrieved stream, in the direction given by kind. Finally, it records the operation in the eventPool so that later operations on the data can be ordered after the copy.

Memory Management Strategy:

- The copy is performed by the asynchronous cudaMemcpyAsync operation, so the memory should not be accessed until that operation has completed. The eventPool can be used to check the completion status.

Exception Handling Mechanism:

Errors from the underlying CUDA calls, including cudaMemcpyAsync and the eventPool operations, are not explicitly handled in this function; it is assumed that the calling code or the CUDA runtime will handle them appropriately.

Relationship with Other Components:

This function uses getStream to obtain a CUDA stream, streamWait to order the stream after pending writes, cudaMemcpyAsync from the CUDA library to perform the asynchronous copy, and the eventPool object to record the operation.

Note: src and dst must point to valid memory locations appropriate for the specified cudaMemcpyKind. Passing null pointers or pointers to the wrong memory type will lead to undefined behavior.

Definition at line 391 of file StreamManager.cuh.

|

inline |

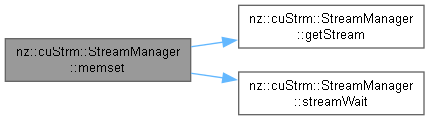

Asynchronously sets a block of CUDA device memory to a specified value.

| data | A pointer to the CUDA device memory to be set (device-to-host). |

| value | The value to set each byte of the memory block to. |

| count | The number of bytes to set. |

This function asynchronously initializes a block of CUDA device memory to a given byte value. It first retrieves a CUDA stream using the getStream function. It then calls streamWait so that the stream waits for all pending writes recorded for data. Next, it uses cudaMemsetAsync to set count bytes of the device memory to value in the retrieved stream. Finally, it records the operation in the eventPool so that later operations on data can be ordered after it.

Memory Management Strategy:

- The memory is written by the asynchronous cudaMemsetAsync operation, so it should not be accessed until that operation has completed. The eventPool can be used to check the completion status.

Exception Handling Mechanism:

Errors from the underlying CUDA calls, including cudaMemsetAsync and the eventPool operations, are not explicitly handled in this function; it is assumed that the calling code or the CUDA runtime will handle them appropriately.

Relationship with Other Components:

This function uses getStream to obtain a CUDA stream, streamWait to order the stream after pending writes to data, cudaMemsetAsync from the CUDA library to perform the asynchronous fill, and the eventPool object to record the operation.

Note: data must point to valid CUDA device memory. Passing a null pointer or a pointer to non-CUDA device memory will lead to undefined behavior.

Definition at line 360 of file StreamManager.cuh.
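Because value is applied to each byte and count is measured in bytes, memset behaves differently from an element-wise fill. The host-side sketch below uses std::memset, which has the same byte-wise semantics as cudaMemsetAsync, to show the effect on 32-bit integers; fillInt32 is a hypothetical helper. Note that count must be a byte count (n * sizeof(T) for n elements of T), whereas the malloc parameter table above describes size as a number of elements.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Host-side analogue of the byte-wise fill done by cudaMemsetAsync.
// std::memset converts `value` to unsigned char and writes it into each of
// the first `count` bytes. fillInt32 is a hypothetical helper.
std::vector<std::int32_t> fillInt32(std::size_t elements, int value) {
    std::vector<std::int32_t> buf(elements);
    // The count argument is in bytes, so scale the element count.
    std::memset(buf.data(), value, elements * sizeof(std::int32_t));
    return buf;
}
```

Zero-filling works for any element type, but a value of 1 produces 0x01010101 (16843009) in each 32-bit element, not 1. A nonzero per-element value such as 1.0f needs a kernel (for example through submit) instead.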

|

inline |

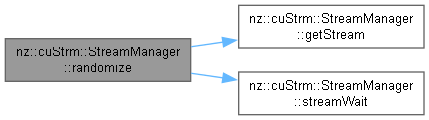

Generates uniformly distributed random numbers on GPU using CURAND.

| data | Device pointer to allocated memory for random numbers |

| size | Number of elements to generate |

| seed | Seed value for pseudo-random generator |

| rngType | CURAND RNG algorithm type (e.g., CURAND_RNG_PSEUDO_XORWOW) |

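This function initializes a CURAND generator of type rngType, seeds it with seed, and fills the first size elements of data with uniformly distributed random numbers generated on the GPU.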

Definition at line 757 of file StreamManager.cuh.

void recordData (T *data, cudaStream_t stream) [inline]

Records write completion event for asynchronous data operations.

| T | Data type (inferred from pointer) |

| data | Device memory pointer tracking write completion |

| stream | CUDA stream where write operation occurred |

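This method records a completion event for data on stream, marking the point at which the write enqueued on that stream finishes. Later streamWait or syncData calls on the same data use this event to order work after the write.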

Definition at line 886 of file StreamManager.cuh.

void streamWait (T *data, cudaStream_t stream) [inline]

Synchronizes CUDA stream execution until data writes complete.

| T | Data type (inferred from pointer) |

| data | Device memory pointer with pending write operations |

| stream | CUDA stream to apply synchronization constraints |

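This function makes stream wait for the completion events recorded for data, so that work enqueued on stream afterwards does not begin until the pending writes to data have finished. Unlike syncData, it constrains the stream rather than blocking the host thread.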

Definition at line 840 of file StreamManager.cuh.
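recordData and streamWait together form an event-based dependency: the first marks when the latest write to a buffer finishes, and the second makes a stream wait for that point. The discrete-time model below illustrates the idea on the host, in the spirit of cudaEventRecord and cudaStreamWaitEvent. DependencyModel, its integer time units, and the choice to ignore untracked buffers are assumptions of this sketch rather than details of StreamManager.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <vector>

// Discrete-time model of event-based data dependencies. Each stream's "tail"
// is the finish time of its last enqueued operation. DependencyModel and its
// members are hypothetical.
class DependencyModel {
public:
    explicit DependencyModel(std::size_t streams) : tail_(streams, 0) {}

    // Enqueue work of the given duration; returns its finish time.
    int enqueue(std::size_t stream, int duration) {
        tail_[stream] += duration;
        return tail_[stream];
    }

    // Like recording an event at the stream tail: the buffer's last write
    // is complete when the stream's current work is complete.
    void recordData(const void* data, std::size_t stream) {
        lastWrite_[data] = tail_[stream];
    }

    // Later work on `stream` cannot start before the buffer's last recorded
    // write finishes. In this model, untracked buffers add no constraint.
    void streamWait(const void* data, std::size_t stream) {
        auto it = lastWrite_.find(data);
        if (it != lastWrite_.end()) {
            tail_[stream] = std::max(tail_[stream], it->second);
        }
    }

    int tail(std::size_t stream) const { return tail_[stream]; }

private:
    std::vector<int> tail_;
    std::unordered_map<const void*, int> lastWrite_;
};
```

For example, if a write on stream 0 finishes at t=5 and stream 1 then waits on that buffer, a 2-unit operation on stream 1 finishes at t=7 rather than t=2.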

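template<typename F , typename... Args>
void submit (F func, dim3 grid, dim3 block, size_t shared, T *odata, T *idata, Args... args) [inline]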

Asynchronously submits a CUDA kernel with stream-ordered dependency management.

| F | CUDA kernel function type (automatically deduced) |

| Args | Variadic template parameter types (automatically deduced) |

| func | CUDA kernel function pointer (host-to-device) |

| grid | Grid dimension configuration (host-to-device) |

| block | Thread block dimension configuration (host-to-device) |

| shared | Dynamic shared memory size in bytes (host-to-device) |

| odata | Output data device pointer (device-to-device) |

| idata | Input data device pointer (device-to-device) |

| args | Additional kernel arguments (host-to-device) |

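This method implements full lifecycle management for stream-ordered kernel execution. Like memcpy and memset, it acquires a stream from the pool, makes that stream wait for pending writes to idata and odata, launches func on the stream with the given grid, block, and dynamic shared-memory configuration, and records completion so that later operations on odata are ordered after the kernel.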

Definition at line 471 of file StreamManager.cuh.
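The class overview mentions a four-stage protocol, and the memcpy and memset descriptions show its shape: acquire a stream with getStream, wait on pending writes with streamWait, enqueue the operation, then record completion in the eventPool. The host-only model below applies that sequence to a kernel submission, assuming submit follows the same protocol. SubmitModel, its abstract time units, and the kernel durations are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <utility>
#include <vector>

// Host-side model of the four-stage submit protocol:
//   1. acquire a stream round-robin
//   2. wait on the last writes of idata and odata
//   3. enqueue the "kernel" (modeled as a duration)
//   4. record odata's new last-write time on that stream
// SubmitModel and its members are hypothetical.
class SubmitModel {
public:
    explicit SubmitModel(std::size_t streams) : tail_(streams, 0) {}

    // Returns {stream index, finish time} of the submitted work.
    std::pair<std::size_t, int> submit(const void* odata, const void* idata, int duration) {
        std::size_t s = next_++ % tail_.size();  // stage 1
        waitFor(idata, s);                        // stage 2: read after write
        waitFor(odata, s);                        // stage 2: write after write
        tail_[s] += duration;                     // stage 3
        lastWrite_[odata] = tail_[s];             // stage 4
        return {s, tail_[s]};
    }

private:
    void waitFor(const void* data, std::size_t s) {
        auto it = lastWrite_.find(data);
        if (it != lastWrite_.end()) {
            tail_[s] = std::max(tail_[s], it->second);
        }
    }

    std::vector<int> tail_;
    std::size_t next_ = 0;
    std::unordered_map<const void*, int> lastWrite_;
};
```

In this model only the last writer of each buffer is tracked, so a kernel that overwrites a buffer another stream is still reading is not ordered against that read; this section does not say whether the real class handles that case.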

void sync () const [inline]

Synchronizes all CUDA streams in the stream pool by blocking the host thread.

This function performs a full barrier synchronization across all managed CUDA streams in the stream pool. It sequentially waits for the completion of all operations enqueued in every stream, ensuring no pending GPU work remains after return.

Exceptions: none are thrown explicitly. CUDA runtime errors may surface through subsequent CUDA error checks, such as cudaGetLastError().

Definition at line 671 of file StreamManager.cuh.

void syncData (T *data) [inline]

Synchronizes host thread with completion events for a specific data object.

| data | Device data pointer to synchronize (device-to-host) |

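This function provides targeted synchronization for a specific data object: it blocks the host thread until the completion events recorded for data have finished. Unlike sync, it does not wait for every stream in the pool.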

Definition at line 714 of file StreamManager.cuh.
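The practical difference between sync and syncData is how long the host blocks. The sketch below captures it with hypothetical finish times: sync waits for the latest stream tail across the pool, while syncData waits only for the recorded write to one buffer. BarrierModel and its fields are illustrative assumptions, not part of StreamManager.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <vector>

// Compares host blocking time for a full barrier (sync) and a targeted wait
// (syncData). Times are abstract units; BarrierModel is hypothetical.
struct BarrierModel {
    std::vector<int> streamTail;                     // finish time of last op per stream
    std::unordered_map<const void*, int> lastWrite;  // finish time of each buffer's last write

    // sync(): the host waits for every stream, i.e. until the latest tail.
    int syncTime() const {
        if (streamTail.empty()) return 0;
        return *std::max_element(streamTail.begin(), streamTail.end());
    }

    // syncData(): the host waits only for this buffer's recorded write.
    int syncDataTime(const void* data) const {
        auto it = lastWrite.find(data);
        return it == lastWrite.end() ? 0 : it->second;
    }
};
```

If one buffer's last write finishes at t=3 while an unrelated stream runs until t=40, syncData on that buffer lets the host continue at t=3, whereas sync holds it until t=40.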